Azure Open AI and Microsoft Fabric

Get ready for data enrichement in Microsoft Fabric

Azure OpenAI is fun and exciting and we can use it to do amazing stuff. In combination with Spark on Microsoft Fabric or Azure Synapse Analytics, we can transform and generate large amounts of text data and make use of OpenAI’s flexibility in defining the transformation. The SynapseML library that comes pre-installed on all Synapse Spark pools and Fabric workspaces includes an OpenAI module that allows you to perform OpenAI transformations on spark dataframes, enabling OpenAI at scale. Azure OpenAI is fun and exciting and we can use it to do amazing stuff. In combination with Spark on Microsoft Fabric or Azure Synapse Analytics, we can transform and generate large amounts of text data and make use of OpenAI’s flexibility in defining the transformation.

Together with Floris Berends we had a look into the possibilities and wrote the post below

Requirements

To run this example you need to have:

- An Azure OpenAI service

- A model deployment

- A Microsoft Fabric workspace Alternatively, a Synapse Analytics workspace

- A Spark Notebook

Extracting text fields from raw social media posts

Let’s say we are scraping social media posts and are interested in some of the details. Usually, scraping text fields results in some pretty messy data. For this example, we are using the Scikit-Learn newsgroups open dataset.

Set up a Spark Dataframe

In order to load the open dataset into a spark dataframe, we first load it into a pandas dataframe. Of course if you are using your own data, you can load the data from anywhere, as long as it fits into a spark dataframe

import pandas as pd

from sklearn.datasets import fetch_20newsgroups

newsgroups = fetch_20newsgroups(subset="train", categories=['talk.politics.misc'])

pd_df = pd.DataFrame(newsgroups["data"], columns=["data"])

df = spark.createDataFrame(pd_df)Set up our parameters

To prepare the OpenAI transformation, we need to provide the API with a number of connection and configuration parameters. These include the Azure OpenAI service name, the name of the model deployment, and a prompt that will specify our transformation. The parameters can be found in the Azure Portal, on your Azure OpenAI resource. If you have not yet deployed a model, do this now. Note that the prompt specifies what we want the model to do, but also specifies the format in which we want the model to respond. This is crucial in getting reliable results from the model and this is what enables us to use the transformation as part of a pipeline.

openai_service_name = "<YOUR SERVICE NAME>"

openai_deployment_name = "<YOUR DEPLOYMENT NAME>"

openai_key = "<YOUR SERVICE KEY>"

source_content_column = "data"

system_prompt = """

You will read the raw text of an e-mail and extract the senders e-mail

address and subject from the text. You will also list the topics of the email, provide a short one-sentence summary, and output the sentiment of the email. Ensure that the sentiment is one of the following: negative, neutral, positive.

Your response will be in the following format

{{

"EMAILADDRESS": "",

"SUBJECT": "",

"SUMMARY": "",

"SENTIMENT": "",

"TOPICS: []

}}

"""Set up the prompt column

Because OpenAI needs a prompt in order to generate a completion, we need to setup a prompt column that includes both the instruction (system_prompt) we set up earlier and our data. The way that Azure OpenAI chat completions work, is that you can provide the ‘chat history’ as a message column. This column is what we will use as input for the transformation. Additionally, Azure OpenAI chat completion messages include a ‘role’ parameter. The role specifies who sent the message. In a normal chat interaction, there are 2 roles: the user and the assistant (i.e. the model). However, it is possible to provide a ‘system’ message that will instruct the model how to behave. We will use a ‘system’ message in order to instruct the model on how to transform our data. In order to do this, we need to set up the prompt column in the following way:

- A message with the ‘system’ role and our instruction as content.

- A message with the ‘user’ role and our data as content.

import pyspark.sql.functions as F

from pyspark.sql.types import ArrayType, StructType, StructField, StringType

df = df.withColumn("prompt", F.udf(

lambda system_prompt, content: [{"name":"system", "role":"system", "content": system_prompt},{"name":"user", "role":"user", "content": content}],

ArrayType(

StructType([

StructField("name", StringType(),False),

StructField("role", StringType(),False),

StructField("content", StringType(),False)

]

)

)

)(F.lit(system_prompt),F.col(source_content_column)))Calling the Azure OpenAI API

Now that we have the input dataframe with the data and prompt just how we want it, we can set up the call to the Azure OpenAI API. Note that Spark will not immediately execute the transformation, but will simply setup the plan for the dataframe. The API will only be called when we actually need the data (e.g. when we save or display the dataframe).

from synapse.ml.cognitive import OpenAIChatCompletion

completion = (

OpenAIChatCompletion()

.setSubscriptionKey(openai_key)

.setDeploymentName(openai_deployment_name)

.setUrl(f"https://{openai_service_name}.openai.azure.com/")

.setMessagesCol("prompt")

.setErrorCol("error")

.setOutputCol("output")

.limit(10)

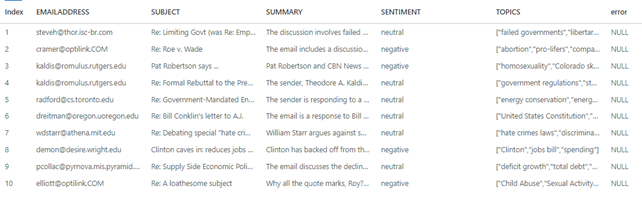

)Transforming the results

The OpenAIChatCompletion mehthod simply puts the completion results into the output column, but we want to have the results in separate columns. Before we can do this we need to define the output schema.

output_columns = "EMAILADDRESS,SUBJECT,SUMMARY,SENTIMENT,TOPICS"

prompt_schema = StructType(

[StructField(col, StringType(), True)

for col in output_columns.split(",")

])

df_result = completion.transform(df.limit(10)).withColumn(

"response",

F.from_json(

F.col("output.choices.message.content").getItem(0)

,prompt_schema)

).select("response.*","error")Displaying and Verifying the results

There are a number of things that can go wrong. For any row, errors returned by the API will be put into the error column that you provided by .setErrorCol. We can display the dataframe to inspect the results:

display(df_result)

Final

It might seem that this setup is so versatile that you can use it to apply any transformation you desire on any column in any dataset. Although this might not be far from the truth, there are a couple of things you need to consider:

- Cost: Azure OpenAI transformations are more expensive then those that do not rely on external APIs (e.g. Spark Native transformation like map(), flatten(), explode(), or using regular expressions and the like).

- Complexity: This example applies a transformation with a simple output schema. It might very well be the case that asking a LLM to output data in a very complex schema will not turn out well.

- Language: This example applies a transformation that is primarily a language based transformation: extracting and summarizing information that is available as natural language. Using LLMs to apply math-based, logic-based, or code-based transformations might not show reliable results.

The main take-away is that using Azure OpenAI to transform text-fields though natural language operations like summarization, description and extraction can be done fast and reliable. We are looking forward to seeing where this technology will take us.

Learn more

Data science in Microsoft Fabric

0 Comments