How to use the PySpark executor in Notebooks in Microsoft Fabric?

In this blog post, we will explore how to use the PySpark executor in Notebooks in Microsoft Fabric to leverage the power of Spark and achieve high concurrency, flexibility and scalability.

What is the PySpark executor?

The PySpark executor is a built-in kernel that allows you to run Python code on top of the remote Fabric Spark compute. This means that you can use the familiar PySpark API to interact with Spark and access its rich functionality, such as Spark SQL, Spark MLlib, Spark Streaming, and more. The PySpark executor also supports multiple languages in one notebook, so you can switch between Python, Scala, SQL, HTML, and R using magic commands.

The PySpark executor is designed to handle concurrent and parallel execution of notebook cells. This means that you can run multiple cells at the same time, without waiting for the previous ones to finish. This can improve the performance and efficiency of your notebook sessions, especially when you have long-running or complex tasks. The PySpark executor also allows you to share the same Spark session across multiple notebooks, which can reduce the overhead of creating and managing Spark contexts.

How to use the PySpark executor in Notebooks in Microsoft Fabric?

To use the PySpark executor in Notebooks in Microsoft Fabric, follow these steps:

- Create a Fabric notebook. This is a web-based interactive environment where you can write and run code, visualize data, and document your analysis. You can create notebooks from scratch or use templates and samples provided by Microsoft Fabric.

- Set the primary language to PySpark (Python). This will make the PySpark executor the default kernel for your notebook. You can also use multiple languages in one notebook by specifying the language magic command at the beginning of a cell, such as %%pyspark, %%spark, %%sql, %%html, or %%sparkr.

- Write and run your PySpark code. You can use the PySpark API to interact with Spark and perform various data engineering and data science tasks. You can also use other Python libraries and packages, such as Pandas, NumPy, Matplotlib, and more. You can install Python libraries for the current session with inline commands such as %pip install, and the built-in mssparkutils package helps you perform common tasks in Fabric notebooks, such as working with files and running other notebooks.

- Monitor and troubleshoot your notebook sessions. You can use the Fabric monitor to view the status and metrics of your Spark pool and notebook sessions. You can also use the Fabric debugger to debug your PySpark code and identify errors and issues.

Example: Using the PySpark executor to run multiple notebooks in parallel

In this example, we will use the PySpark executor to run multiple notebooks in parallel and collect their results. This can be useful when you have a set of notebooks that perform similar or related tasks, such as data preparation, feature engineering, model training, or evaluation. By running them in parallel, you can save time and resources, and also compare and analyze their outputs.

To run multiple notebooks in parallel, we will use the ThreadPoolExecutor class from the concurrent.futures module in Python. This class provides a high-level interface for asynchronously executing callables using a pool of threads. We will also use the mssparkutils.notebook.run function, which runs a notebook with a timeout and a dictionary of parameters and returns the notebook's exit value.
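The submit-and-collect pattern can be tried in plain Python before any Fabric call is involved. The sketch below is only an illustration under stated assumptions: fake_notebook_run is a hypothetical stand-in for a notebook run (it just sleeps and returns a string), and the task names and delays are made up.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_notebook_run(name, delay_seconds):
    """Hypothetical stand-in for a notebook run: sleep, then return a status string."""
    time.sleep(delay_seconds)
    return f"{name} finished"


# Hypothetical task list: (notebook name, simulated run time in seconds)
tasks = [("NB_A", 0.2), ("NB_B", 0.1), ("NB_C", 0.05)]

with ThreadPoolExecutor(max_workers=len(tasks)) as executor:
    # submit() returns immediately with a Future for each task
    futures = [executor.submit(fake_notebook_run, name, delay) for name, delay in tasks]
    # result() blocks until that task is done; walking the list keeps submission order
    outputs = [future.result() for future in futures]

print(outputs)
```

Because the three calls overlap, the whole batch should take roughly as long as the slowest task rather than the sum of all three, and the outputs come back in the order the tasks were submitted.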

The following cell needs to be defined in your Notebook as a Parameters cell:

    path = [
        {
            "path": "NB_Bronze_Silver",
            "params": {
                "source_schema": "Application",
                "source_name": "People",
                "sourceLakehouse": "xxxxxxxxxxxxxxxxxxxxxxx",
                "target_schema": "Application",
                "target_name": "People",
                "targetLakehouse": "xxxxxxxxxxxxxxxxxxxxxxx",
                "useRootDefaultLakehouse": True,
            },
        },
        {
            "path": "NB_Bronze_Silver",
            "params": {
                "source_schema": "Application",
                "source_name": "DeliveryMethods",
                "sourceLakehouse": "xxxxxxxxxxxxxxxxxxxxxxx",
                "target_schema": "Application",
                "target_name": "DeliveryMethods",
                "targetLakehouse": "xxxxxxxxxxxxxxxxxxxxxxx",
                "useRootDefaultLakehouse": True,
            },
        },
    ]
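When the entries differ only in the table name, the same list can be generated instead of typed out. This is a minimal sketch, not part of the original setup: build_notebook_entries is a hypothetical helper, and the lakehouse IDs are the same placeholders used in the cell above.

```python
def build_notebook_entries(notebook_path, schema, table_names, source_lakehouse, target_lakehouse):
    """Return one {"path": ..., "params": ...} entry per table name (hypothetical helper)."""
    return [
        {
            "path": notebook_path,
            "params": {
                "source_schema": schema,
                "source_name": table,
                "sourceLakehouse": source_lakehouse,
                "target_schema": schema,
                "target_name": table,
                "targetLakehouse": target_lakehouse,
                "useRootDefaultLakehouse": True,
            },
        }
        for table in table_names
    ]


# Placeholder lakehouse IDs; replace them with your own values
path = build_notebook_entries(
    notebook_path="NB_Bronze_Silver",
    schema="Application",
    table_names=["People", "DeliveryMethods"],
    source_lakehouse="xxxxxxxxxxxxxxxxxxxxxxx",
    target_lakehouse="xxxxxxxxxxxxxxxxxxxxxxx",
)
print([entry["params"]["source_name"] for entry in path])
```

Adding a table then means adding one name to table_names, which keeps the source and target names from drifting apart.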

#Define Notebook to be executed

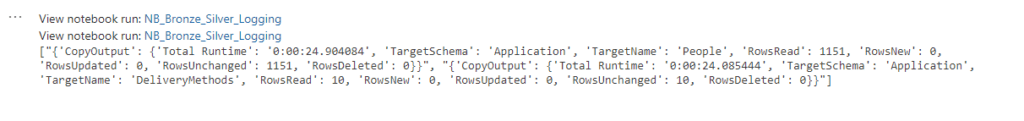

#Define Parameters to be passed throughThe following code snippet shows how to use the PySpark executor to run multiple notebooks(above code snippet) in parallel and collect their results. We assume that we have a list of notebook names that we want to run, and a function called notebook_error_handle that handles any errors or exceptions that may occur during the execution.

    # Import the modules
    from concurrent.futures import ThreadPoolExecutor
    from notebookutils import mssparkutils

    # Timeout for each notebook run, in seconds
    timeout = 90

    # Define the list of notebooks to run (the list from the Parameters cell)
    notebooks = path

    # Define a function to handle errors and exceptions
    def notebook_error_handle(notebook):
        try:
            # Run the notebook and return its exit value
            result = mssparkutils.notebook.run(notebook["path"], timeout, notebook["params"])
            return result
        except Exception as e:
            # Print the error message and return None
            print(f"Error running {notebook}: {e}")
            return None

    # Create an empty list to store the results
    results = []

    # Create a thread pool executor with the same number of threads as notebooks
    with ThreadPoolExecutor(max_workers=len(notebooks)) as executor:
        # Submit the notebook tasks to the executor and store the futures
        notebook_tasks = [executor.submit(notebook_error_handle, notebook) for notebook in notebooks]

        # Iterate over the futures and append the results to the list
        for notebook_task in notebook_tasks:
            results.append(notebook_task.result())

    # Print the results
    print(results)
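For a longer list of notebooks, it can help to cap the number of threads and to key each result by table name so that failures are easy to spot. The sketch below is one possible variation, not the method used above: run_all and run_notebook_stub are hypothetical names, the cap of 4 workers is an arbitrary assumption, and the stub replaces the real notebook call so the example runs outside Fabric.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def run_notebook_stub(notebook):
    """Hypothetical stand-in for notebook_error_handle; fails for one table on purpose."""
    if notebook["params"]["source_name"] == "Broken":
        return None  # mimic the None returned after an exception
    return f"{notebook['params']['source_name']} loaded"


def run_all(notebooks, runner, max_workers=4):
    """Run every entry with `runner` and return {source_name: result}."""
    results = {}
    # Cap the pool size so a long list does not start one thread per notebook
    pool_size = max(1, min(max_workers, len(notebooks)))
    with ThreadPoolExecutor(max_workers=pool_size) as executor:
        future_to_name = {
            executor.submit(runner, nb): nb["params"]["source_name"] for nb in notebooks
        }
        # as_completed yields futures as they finish, not in submission order
        for future in as_completed(future_to_name):
            results[future_to_name[future]] = future.result()
    return results


# Made-up entries for the demo; "Broken" simulates a failing notebook
demo = [
    {"path": "NB_Bronze_Silver", "params": {"source_name": name}}
    for name in ["People", "DeliveryMethods", "Broken"]
]
results_by_name = run_all(demo, run_notebook_stub)
failed = sorted(name for name, result in results_by_name.items() if result is None)
print(failed)
```

In a Fabric notebook, you would pass notebook_error_handle as the runner and the list from the Parameters cell as notebooks. A result of None then marks a run that raised an exception.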

Conclusion

In this blog post, you have learned how to use the PySpark executor in Notebooks in Microsoft Fabric to achieve high concurrency, flexibility and scalability. You have seen how to use the ThreadPoolExecutor class to run multiple notebooks in parallel and collect their results. I hope that this post has given you some insights and tips on how to leverage the power of Spark and concurrency in Microsoft Fabric. Happy coding!

Stay tuned

In my next blog post, I will explain how to use mssparkutils.notebook.runMultiple in Notebooks in Microsoft Fabric.
