Scale your SQL Pool dynamically in Azure Synapse

Scale your Dedicated SQL Pool in Azure Synapse Analytics

In my previous article, I explained how you can Pause and Resume your Dedicated SQL Pool with a Pipeline in Azure Synapse Analytics. In this article I will explain how to scale a SQL Pool up and down via a Pipeline in Azure Synapse Analytics. This is a necessary capability in your Data Movement Solutions: by scaling up only when you need the extra compute and scaling down afterwards, you can optimize costs.

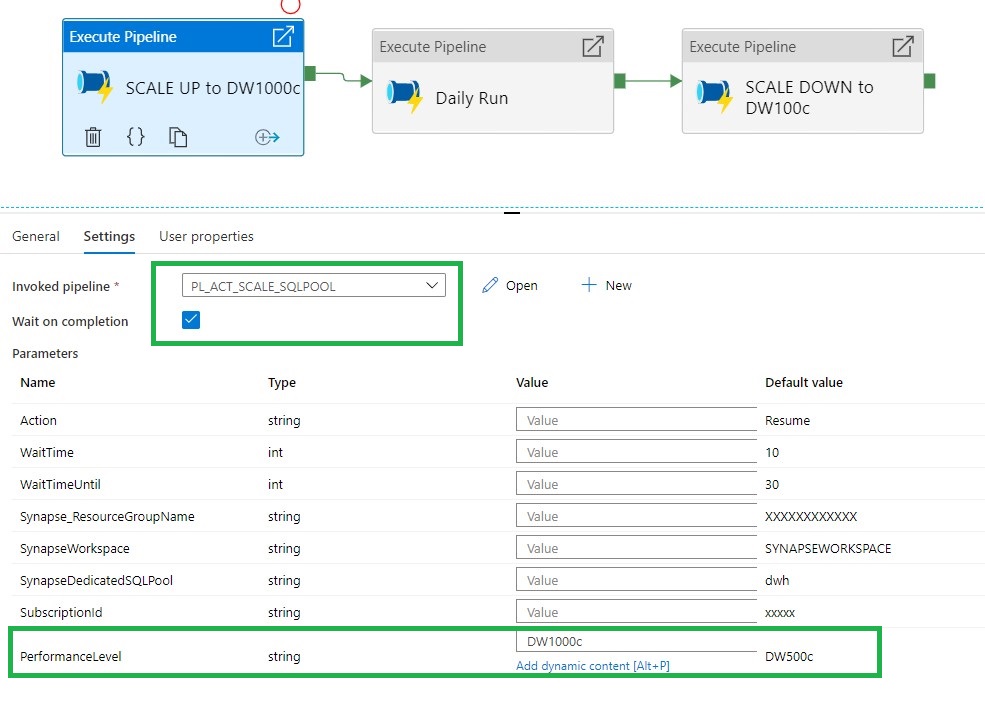

The Pipeline can be added before and after your Nightly Run.

As a quick recap of the previous article, a SQL Pool can have different statuses:

- Pausing: SQL Pool is Pausing, and during this process we cannot change the status.

- Resuming: SQL Pool is Resuming, the SQL Pool is starting and during this process we cannot change the status.

- Scaling: SQL Pool is Scaling, the SQL Pool is scaling to a different compute level and during this process we cannot change the status.

- Paused: SQL Pool is Paused, we can now change the status.

- Online: SQL Pool is Online, we can now change the status.
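The status rules above can be summarised in a small helper. This is only an illustrative Python sketch, not part of the Pipeline itself; the function name `can_change_status` and the two constant names are hypothetical.

```python
# Statuses in which the SQL Pool accepts a new Pause, Resume or Scale request.
STABLE_STATUSES = {"Paused", "Online"}
# Statuses in which an operation is still running and we have to wait.
TRANSITIONAL_STATUSES = {"Pausing", "Resuming", "Scaling"}


def can_change_status(status: str) -> bool:
    """Return True when the pool is in a status that allows a change."""
    if status in STABLE_STATUSES:
        return True
    if status in TRANSITIONAL_STATUSES:
        return False
    # Anything else is not one of the five statuses listed in this article.
    raise ValueError(f"Unknown SQL Pool status: {status!r}")
```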

To allow the Synapse workspace to call the REST API, we need to give the Synapse workspace access to the SQL Pool. In the Access control (IAM) of the SQL Pool, assign the Contributor role to your Synapse Workspace.

Build Pipeline

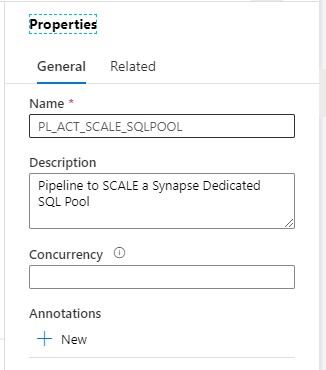

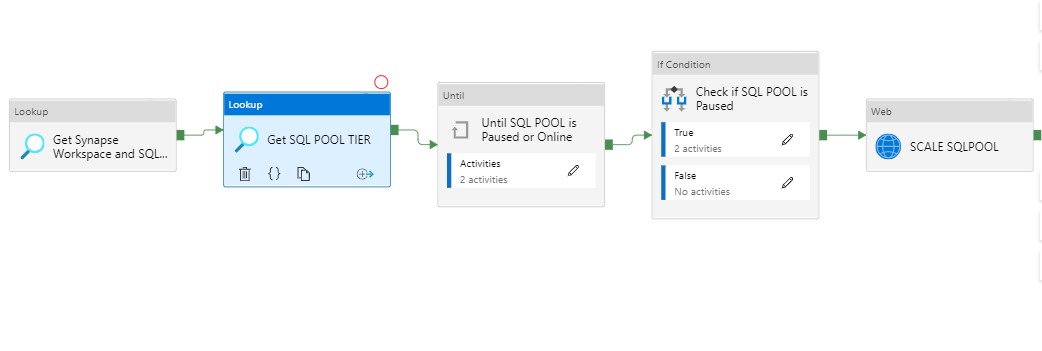

Clone the Pipeline PL_ACT_RESUME_SQLPOOL and rename it to PL_ACT_SCALE_SQLPOOL.

Change the description of the Pipeline to 'Pipeline to SCALE a Synapse Dedicated SQL Pool'.

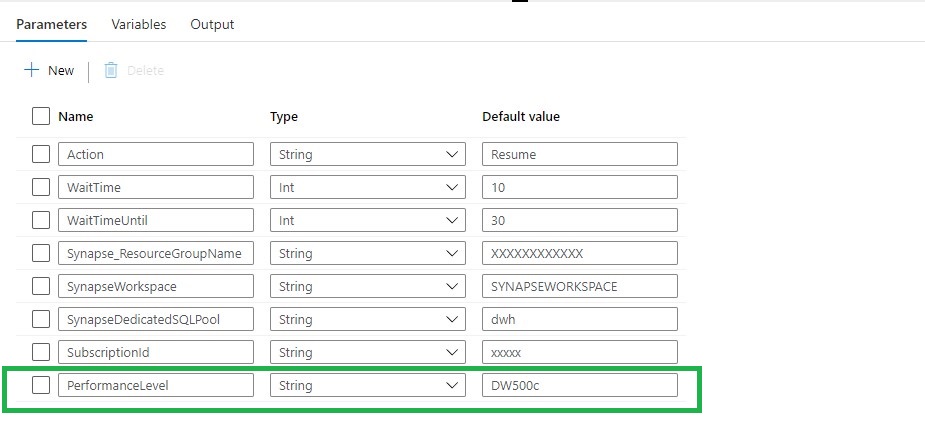

Add the PerformanceLevel parameter to the Parameters of the Pipeline:

Action: RESUME (leave this on RESUME; the SQL Pool must be Online before we can SCALE it)

WaitTime: Wait time in seconds before the Pipeline will finish

WaitTimeUntil: Wait time in seconds for the retry process

Synapse_ResourceGroupName: Name of the ResourceGroup of the used Synapse Workspace

SynapseWorkspace: Name of the Synapse Workspace

SynapseDedicatedSQLPool: Name of the dedicated SQL Pool

SubscriptionID: Subscription ID of the Synapse Workspace

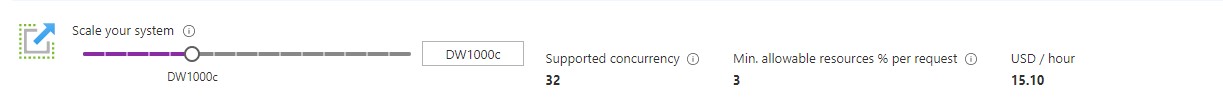

PerformanceLevel: The Database Performance level (DW100c, DW200c, DW300c, DW400c, DW500c, DW1000c, DW1500c, DW2000c, DW2500c, DW3000c, DW5000c, DW6000c, DW7500c, DW10000c, DW15000c, DW30000c)
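Because the PerformanceLevel value is passed straight into the REST call later on, it can help to check it before starting the Pipeline. The following is a minimal Python sketch under that assumption; `validate_performance_level` and `is_scale_up` are hypothetical helper names and are not part of the Pipeline.

```python
# Performance levels as listed in this article, ordered from smallest to largest.
PERFORMANCE_LEVELS = [
    "DW100c", "DW200c", "DW300c", "DW400c", "DW500c", "DW1000c",
    "DW1500c", "DW2000c", "DW2500c", "DW3000c", "DW5000c", "DW6000c",
    "DW7500c", "DW10000c", "DW15000c", "DW30000c",
]


def validate_performance_level(level: str) -> str:
    """Return the level unchanged, or raise if it is not a known level."""
    if level not in PERFORMANCE_LEVELS:
        raise ValueError(f"Unknown performance level: {level!r}")
    return level


def is_scale_up(current: str, target: str) -> bool:
    """True when target is a higher compute level than current."""
    current_index = PERFORMANCE_LEVELS.index(validate_performance_level(current))
    target_index = PERFORMANCE_LEVELS.index(validate_performance_level(target))
    return target_index > current_index
```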

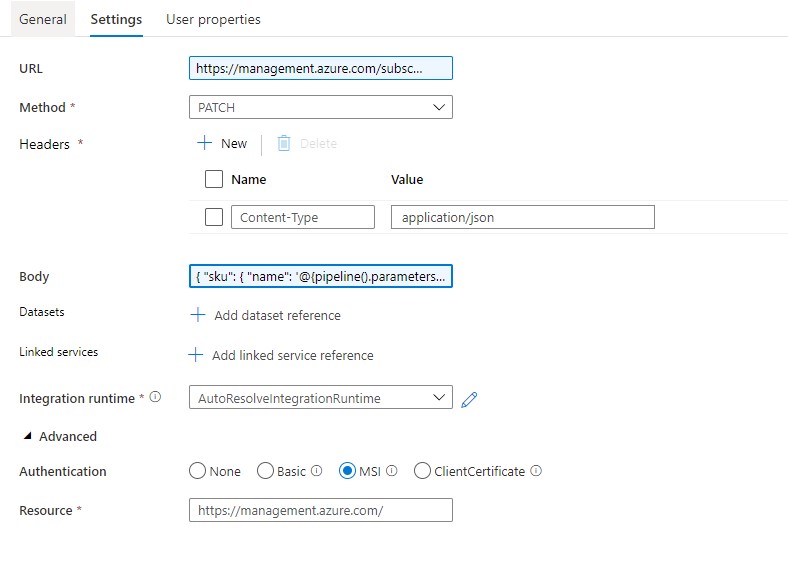

Web Activity

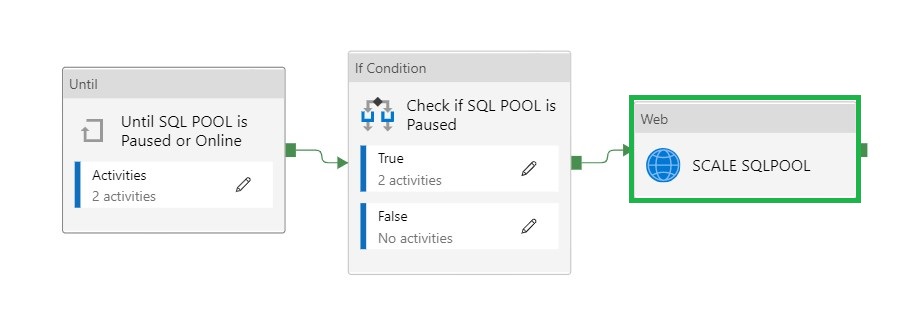

We leave the first two activities as is. The Pipeline can only continue when the status is Paused or Online and not one of the other statuses. When the SQL Pool is Paused, the second activity will Resume the SQL Pool.
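The waiting behaviour described above, continuing only once the status is Paused or Online, could be sketched outside the Pipeline roughly as follows. This is an assumption-laden illustration in Python: `get_status` is a hypothetical callable that returns the current status, and `retry_wait_seconds` and `max_attempts` are illustrative names that only loosely mirror the WaitTimeUntil parameter.

```python
import time
from typing import Callable


def wait_until_changeable(
    get_status: Callable[[], str],
    retry_wait_seconds: float = 30,
    max_attempts: int = 20,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll until the pool is Paused or Online, then return that status."""
    for _ in range(max_attempts):
        status = get_status()
        if status in ("Paused", "Online"):
            return status
        # Pausing, Resuming or Scaling: wait and check again.
        sleep(retry_wait_seconds)
    raise TimeoutError("SQL Pool did not reach Paused or Online in time")
```

The `sleep` argument is injectable so the loop can be tried out without actually waiting.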

To Scale the SQL Pool we need to add a new Web Activity.

Name = SCALE SQLPOOL

URL = https://management.azure.com/subscriptions/XXX/resourceGroups/XXX/providers/Microsoft.Synapse/workspaces/XXX/sqlPools/XXX/?api-version=2019-06-01-preview

We need to replace each XXX with the corresponding Pipeline Parameter. The final result will be:

https://management.azure.com/subscriptions/@{pipeline().parameters.SubscriptionID}/resourceGroups/@{pipeline().parameters.Synapse_ResourceGroupName}/providers/Microsoft.Synapse/workspaces/@{pipeline().parameters.SynapseWorkspace}/sqlPools/@{pipeline().parameters.SynapseDedicatedSQLPool}/?api-version=2019-06-01-preview
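To make the substitution concrete, here is the same URL assembled in Python. It is a sketch only: the function name `build_sql_pool_url` and its argument names are hypothetical, while the URL layout and the api-version are copied from the expression above.

```python
def build_sql_pool_url(
    subscription_id: str,
    resource_group: str,
    workspace: str,
    sql_pool: str,
    api_version: str = "2019-06-01-preview",
) -> str:
    """Fill the four placeholders of the management URL used in this article."""
    return (
        f"https://management.azure.com/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Synapse/workspaces/{workspace}"
        f"/sqlPools/{sql_pool}/?api-version={api_version}"
    )
```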

Method = PATCH

Headers = Name: Content-Type, Value: application/json

Body = { "sku": { "name": "@{pipeline().parameters.PerformanceLevel}" } }

Resource = https://management.azure.com/
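For readers who want to see what the Web Activity settings amount to as a plain HTTP request, the sketch below builds (but does not send) an equivalent request with Python's standard library. The method, Content-Type header and body shape come from the settings above. The function name `build_scale_request` is hypothetical, and the bearer token is an assumption: outside the Pipeline you would have to obtain a token for the management resource yourself.

```python
import json
import urllib.request


def build_scale_request(
    url: str, performance_level: str, bearer_token: str
) -> urllib.request.Request:
    """Build the PATCH request that asks for a new performance level."""
    # Same body as in the Web Activity: {"sku": {"name": "<level>"}}
    body = json.dumps({"sku": {"name": performance_level}}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PATCH",
        headers={
            "Content-Type": "application/json",
            # Assumption: token acquired separately for the management resource.
            "Authorization": f"Bearer {bearer_token}",
        },
    )
```

Passing the returned object to `urllib.request.urlopen` would send the request; that step is left out here on purpose.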

Please feel free to download the Pipeline code here.

DAILY RUN

Add the above Pipeline as a Start Pipeline before your Daily run and Scale up to the desired Performance Level. When the Daily run is finished, you Scale down to a lower level, or you can add the Pipeline to Pause the SQL Pool.
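The order of operations for the Daily run can be sketched as follows. This is a hypothetical Python outline rather than Pipeline code: `scale` and `run_daily_load` are placeholder callables, and the two default levels are example values only.

```python
from typing import Callable


def run_with_scaling(
    scale: Callable[[str], None],
    run_daily_load: Callable[[], None],
    high_level: str = "DW1000c",
    low_level: str = "DW100c",
) -> None:
    """Scale up, run the daily load, then scale back down."""
    scale(high_level)
    try:
        run_daily_load()
    finally:
        # Scale down even when the load fails, so the pool does not
        # keep running at the expensive level.
        scale(low_level)
```

Scaling down in the `finally` block is a design choice of this sketch; in the Pipeline you would decide yourself whether the scale-down step also runs after a failed Daily run.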

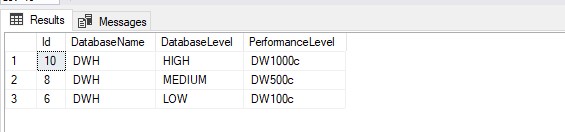

Metadata

If you're already using a database where you store your metadata, you can create a table where you store the desired Performance Level. The only thing you need to do is add a Lookup Activity to get the parameters from your database and replace the Pipeline parameters with the output from the Lookup Activity.

[sql]
CREATE TABLE [configuration].[Database_Level](
    [Id] [int] IDENTITY(1,1) NOT NULL,
    [DatabaseName] [varchar](30) NULL,
    [DatabaseLevel] [varchar](10) NOT NULL,
    [PerformanceLevel] [varchar](10) NOT NULL,
    CONSTRAINT [PK_Pipeline_ExecutionLog] PRIMARY KEY CLUSTERED ( [Id] DESC )
    WITH (STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, OPTIMIZE_FOR_SEQUENTIAL_KEY = OFF) ON [PRIMARY]
) ON [PRIMARY]
[/sql]
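To illustrate what the Lookup Activity would return, the sketch below reads the desired Performance Level for one database. It is written in Python with an in-memory SQLite table as a stand-in for the real metadata database, so the simplified table definition, the sample row and the function names are all assumptions made for this example.

```python
import sqlite3


def create_demo_connection() -> sqlite3.Connection:
    """Create a simplified stand-in for the Database_Level table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE Database_Level ("
        " Id INTEGER PRIMARY KEY AUTOINCREMENT,"
        " DatabaseName TEXT,"
        " DatabaseLevel TEXT NOT NULL,"
        " PerformanceLevel TEXT NOT NULL)"
    )
    # Hypothetical sample row; the values are made up for illustration.
    conn.execute(
        "INSERT INTO Database_Level (DatabaseName, DatabaseLevel, PerformanceLevel)"
        " VALUES (?, ?, ?)",
        ("MySqlPool", "DW100c", "DW500c"),
    )
    return conn


def lookup_performance_level(conn: sqlite3.Connection, database_name: str) -> str:
    """Return the stored PerformanceLevel for the given database."""
    row = conn.execute(
        "SELECT PerformanceLevel FROM Database_Level WHERE DatabaseName = ?",
        (database_name,),
    ).fetchone()
    if row is None:
        raise LookupError(f"No performance level stored for {database_name!r}")
    return row[0]
```

In the Pipeline, the value returned by the lookup would take the place of the PerformanceLevel parameter.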

A SQL Pool (formerly SQL DW)

A SQL Pool (formerly SQL DW) linked to a SQL (Logical) Server requires a slightly different approach.

Use the settings below to create a Pipeline to Scale the SQL Pool.

Action: RESUME

WaitTime: Wait time in seconds before the Pipeline will finish

WaitTimeUntil: Wait time in seconds for the retry process

SQLServer_ResourceGroupName: Name of the ResourceGroup of the used SQL(Logical) Server

SQLServer: SQL(Logical) Server name

SQLServerDedicatedSQLPool: Name of the dedicated SQL Pool

SubscriptionId: Subscription ID of the SQL (Logical) Server

DatabaseTier: The Database Performance level (DW100c, DW200c, DW300c, DW400c, DW500c, DW1000c, DW1500c, DW2000c, DW2500c, DW3000c, DW5000c, DW6000c, DW7500c, DW10000c, DW15000c, DW30000c)

Body: { "requestedServiceObjectiveName": { "name": "@{pipeline().parameters.DatabaseTier}" } }

Hopefully this article has helped you take a step further. As always, if you have any questions, leave them in the comments.
