Connecting Event Hubs in Microsoft Fabric

Connecting Azure Event Hubs with Eventstream in Microsoft Fabric

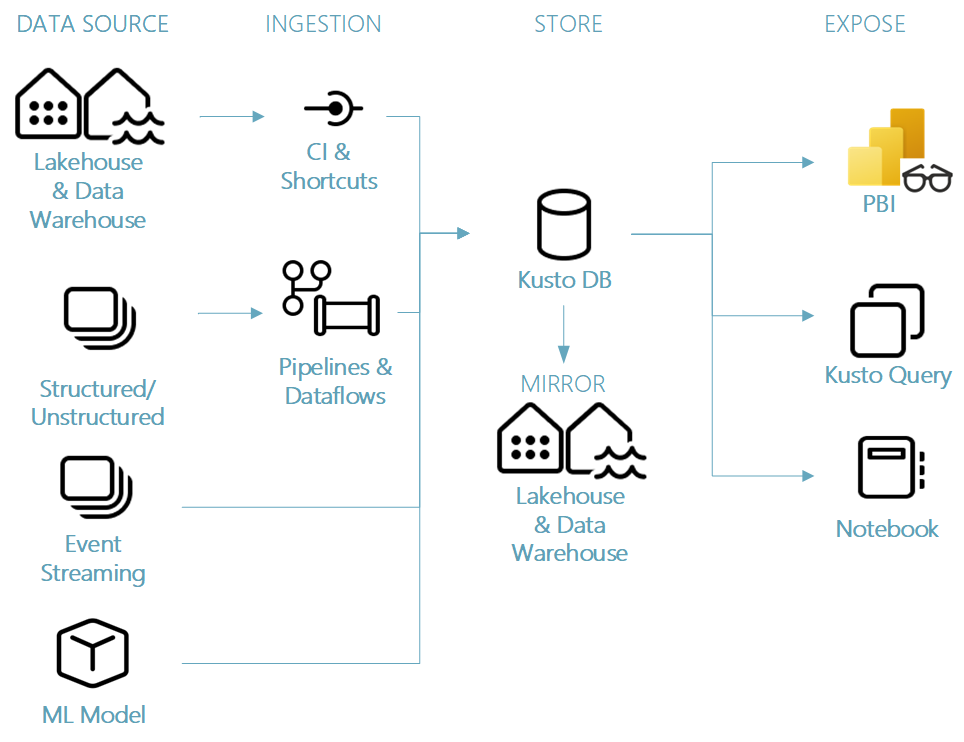

In my previous blog I gave you an introduction to the possibilities of Real-Time Analytics in Microsoft Fabric.

In this blog we take a closer look at how to connect data from one of our existing Azure Event Hubs.

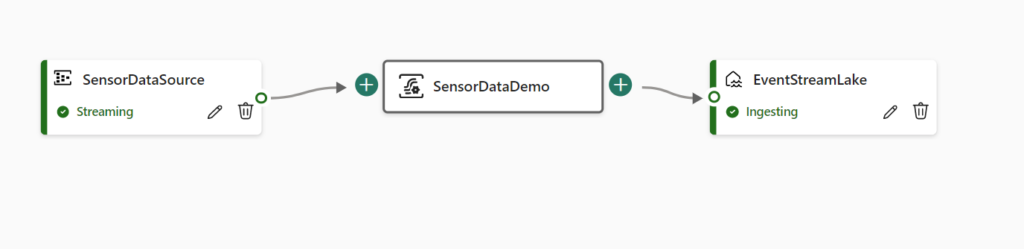

The picture above shows an end-to-end workflow for a Real-Time Analytics scenario, and you can directly see which Fabric artifacts we need to build the solution. Building the complete solution below took me at most 20 minutes.

Loading data from Azure Event Hubs to Lakehouse

Requirements:

- An existing Azure Event Hub.

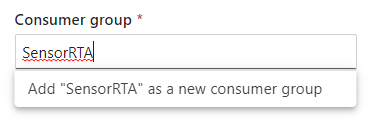

- A new consumer group (never use an existing one). If you reuse an existing consumer group, your existing environment may stop receiving messages from the event hub.

- A Microsoft Fabric workspace.

Note:

Adding a consumer group is not available in the Basic tier; it requires the Standard tier or higher.
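
If you prefer scripting over the Azure portal, the new consumer group can also be created with the Azure CLI command `az eventhubs eventhub consumer-group create`. The minimal sketch below only assembles that command in Python and runs it with `subprocess`; it assumes the Azure CLI is installed and that you are signed in with `az login`, and all resource names are hypothetical placeholders.

```python
import shlex
import subprocess
from typing import List


def consumer_group_create_cmd(resource_group: str, namespace: str,
                              event_hub: str, consumer_group: str) -> List[str]:
    """Assemble the Azure CLI call that creates a dedicated consumer group."""
    return [
        "az", "eventhubs", "eventhub", "consumer-group", "create",
        "--resource-group", resource_group,
        "--namespace-name", namespace,
        "--eventhub-name", event_hub,
        "--name", consumer_group,
    ]


def create_consumer_group(cmd: List[str]) -> None:
    """Run the command; raises CalledProcessError if the CLI reports a failure."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical names: replace them with your own resources.
    cmd = consumer_group_create_cmd("rg-streaming", "myns", "myhub", "fabric-eventstream")
    print(shlex.join(cmd))  # review the command before running it
    # create_consumer_group(cmd)
```

Building the argument list separately from running it makes it easy to print and review the exact command first.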

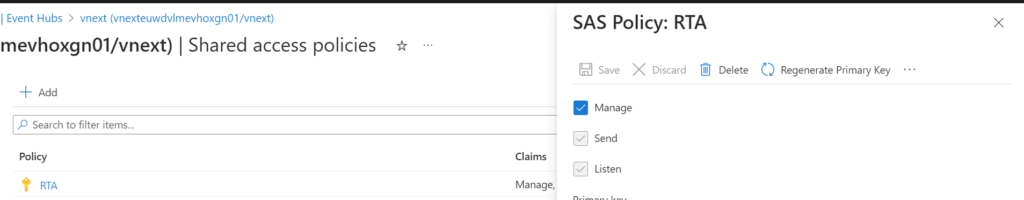

Creating a Shared Access Policy on the Event Hub

Create a new Shared Access Policy on the Event Hub, with the manage option enabled.

Note down the SAS policy name and the primary key. We will need them later to set up the connection in Microsoft Fabric.
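
The policy name and primary key are the two inputs of a Shared Access Signature (SAS) token, which is what a client actually presents to Event Hubs. Fabric handles this for you, but a minimal sketch of the signing scheme described in Microsoft's SAS documentation (HMAC-SHA256 over the URL-encoded resource URI plus an expiry timestamp) makes it concrete. The namespace `myns`, event hub `myhub`, policy name `fabric-eventstream` and key below are hypothetical.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse
from typing import Optional


def generate_sas_token(resource_uri: str, policy_name: str, primary_key: str,
                       ttl_seconds: int = 3600, now: Optional[float] = None) -> str:
    """Create a SAS token for an Event Hubs resource from a Shared Access Policy."""
    issued_at = time.time() if now is None else now
    expiry = int(issued_at + ttl_seconds)  # Unix timestamp after which the token is rejected
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    string_to_sign = f"{encoded_uri}\n{expiry}".encode("utf-8")
    # The key string itself is the HMAC secret (it is not base64-decoded first).
    digest = hmac.new(primary_key.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    signature = urllib.parse.quote_plus(base64.b64encode(digest).decode("ascii"))
    return (f"SharedAccessSignature sr={encoded_uri}&sig={signature}"
            f"&se={expiry}&skn={policy_name}")


# Hypothetical values: use your own namespace, event hub, policy name and key.
token = generate_sas_token("https://myns.servicebus.windows.net/myhub",
                           "fabric-eventstream", "<primary-key>")
```

Anyone holding the primary key can mint such tokens, so treat the key like a password.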

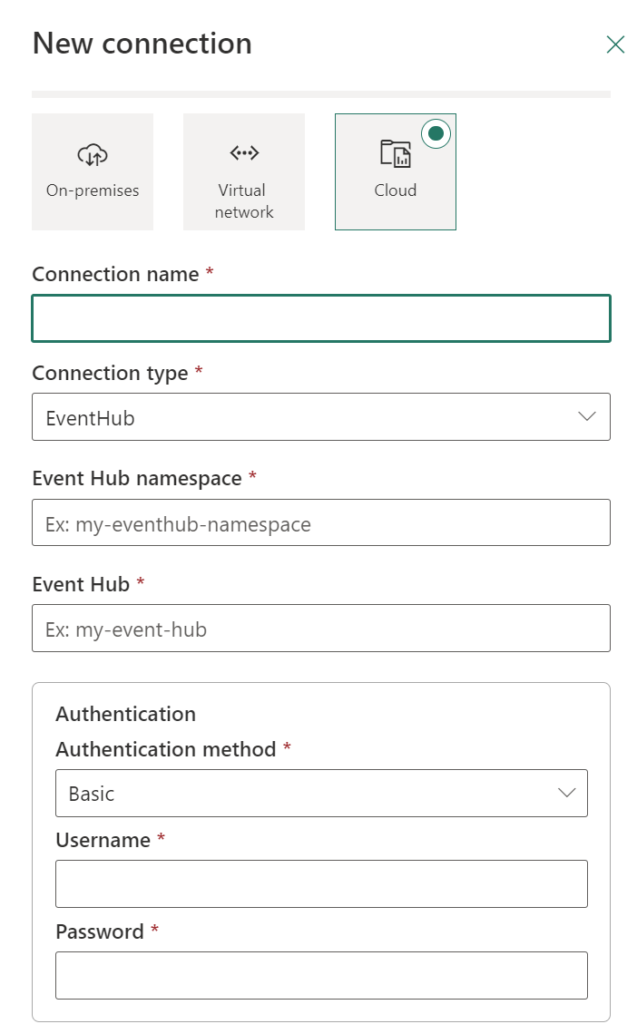

Create a Data Connection in Microsoft Fabric

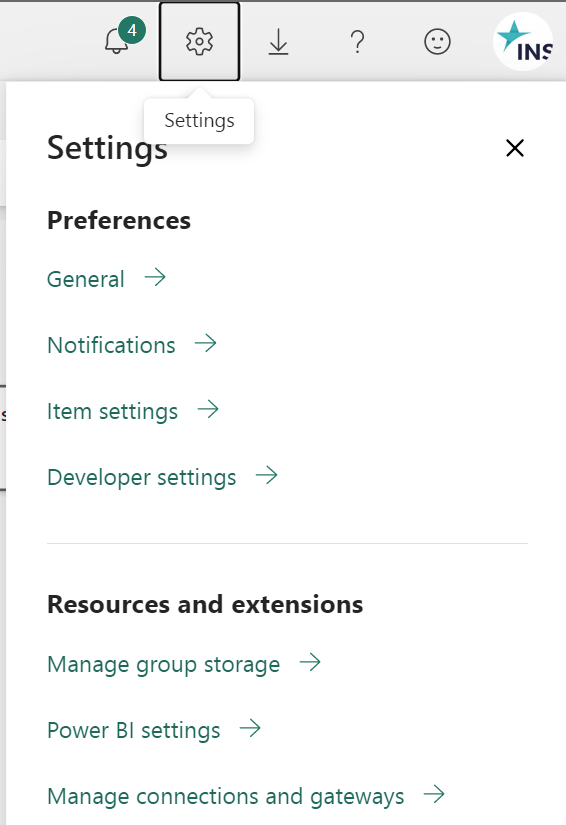

In the menu bar (top right), open the Settings menu and select the Manage connections option.

Search for Event Hub.

| Setting | Value |
| --- | --- |
| Connection name | Name of the connection |
| Event Hub namespace | https://xxxxxxx.servicebus.windows.net:443/ |
| Authentication | Username: name of the SAS policy; Password: primary key of the SAS policy |
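
Fabric asks for the namespace endpoint, the SAS policy name and the primary key as separate fields. The same three values make up a standard Event Hubs connection string, which is handy if you also want to test the event hub from a script (for example with `EventHubConsumerClient.from_connection_string` in the `azure-eventhub` SDK). A minimal stdlib sketch, with hypothetical names and key:

```python
from typing import Optional
from urllib.parse import urlparse


def namespace_host(namespace_endpoint: str) -> str:
    """Reduce 'https://xxx.servicebus.windows.net:443/' to 'xxx.servicebus.windows.net'."""
    if "://" not in namespace_endpoint:
        namespace_endpoint = "https://" + namespace_endpoint
    host = urlparse(namespace_endpoint).hostname  # drops scheme, port and path
    if not host:
        raise ValueError(f"Cannot read a host name from {namespace_endpoint!r}")
    return host


def build_connection_string(namespace_endpoint: str, policy_name: str,
                            primary_key: str, event_hub: Optional[str] = None) -> str:
    """Combine the Fabric connection fields into an Event Hubs connection string."""
    parts = [
        f"Endpoint=sb://{namespace_host(namespace_endpoint)}/",
        f"SharedAccessKeyName={policy_name}",
        f"SharedAccessKey={primary_key}",
    ]
    if event_hub:  # EntityPath names the event hub; event hub-level policies include it
        parts.append(f"EntityPath={event_hub}")
    return ";".join(parts)
```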

Now that we have created a connection to our Azure Event Hub, we’re ready to receive our streaming data and set up an Eventstream.

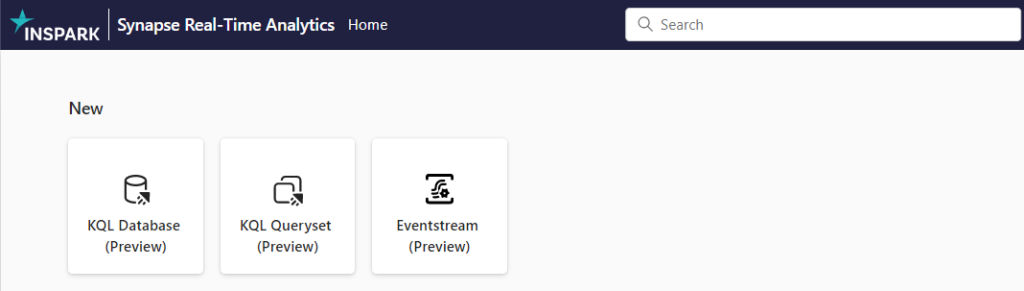

So let’s start by opening the Synapse Real-Time Analytics experience, which you can find in the bottom-left corner of your Microsoft Fabric environment.

Fabric Capacity

Make sure you have a Microsoft Fabric or Power BI Premium capacity assigned to this workspace.

Create an Eventstream in Microsoft Fabric

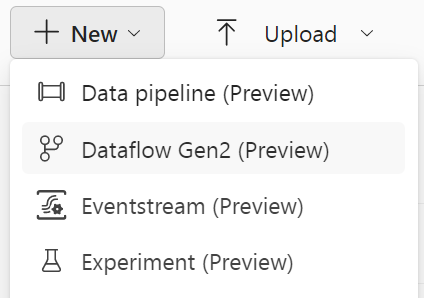

Within your Fabric workspace, select New in the upper-left corner and then select Eventstream.

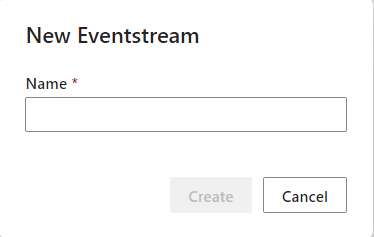

Enter a name for the Eventstream and click Create.

Setup can take a couple of minutes, but don’t worry: a lot is happening in the background. Microsoft Fabric is a SaaS application, so the underlying resources are deployed for you.

The great advantage for you is that everything is much easier to set up.

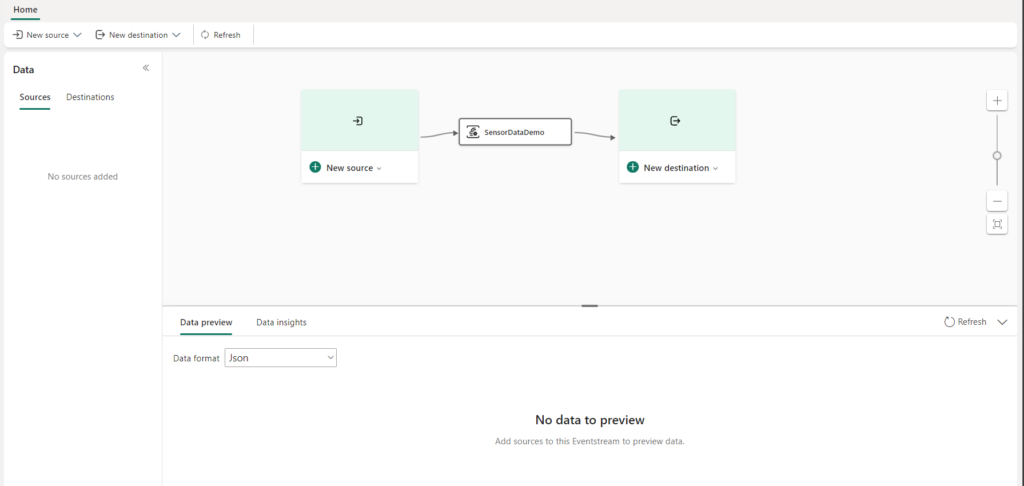

Once everything is ready, you will see the new Eventstream screen shown above.

Create the Eventstream Source

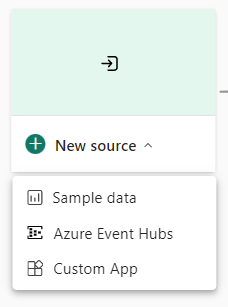

The next step is to connect our source, in this case the connection to the Event Hub.

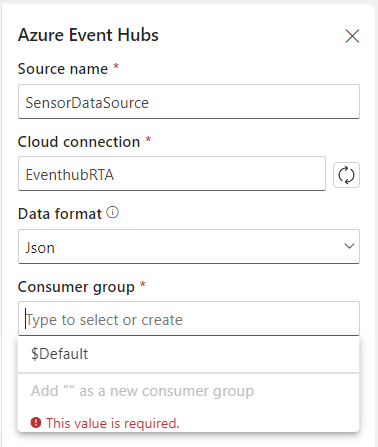

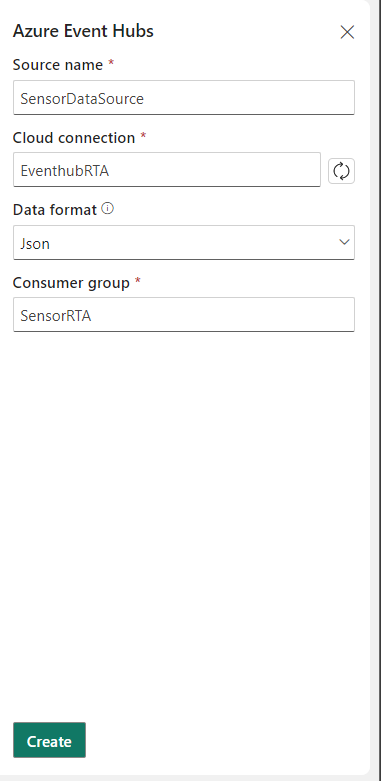

Select Azure Event Hubs; a new pane opens.

| Source name | Define a name for your source, you can use the name of the Event Hub or a custom name |

| Cloud Connection | Select the connection you’ve created in the beginning of this blog |

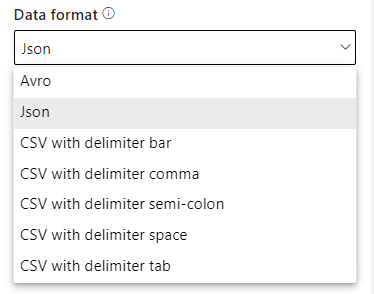

| Data Format |

Define the correct format based on your Event Stream

|

| Consumper group |

You can select a group you have a created in the beginning of this blog. Or you create a new one as well.

|

Note: Never use an existing consumer group, because the application currently connected to that consumer group can stop receiving data.

Once all the required fields are filled in, click Create to create the source of your Eventstream.

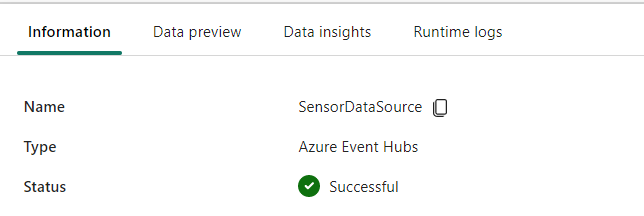

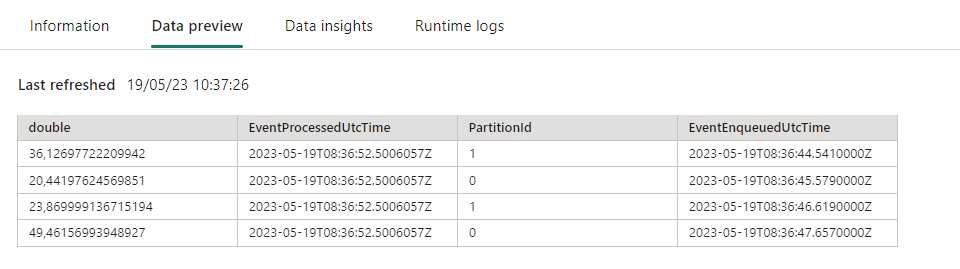

After the connection is set up successfully, click Data Preview to see what kind of data is coming in and whether it is the data you expect.
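
Data Preview only shows something when events are actually arriving. If your producer is quiet, a few test events can be posted with the Event Hubs "Send event" REST operation. This is a minimal stdlib-only sketch: it assumes you already have a SAS token for the event hub, signed with a policy that has Send rights (the Manage option includes them). The URL, query parameters and `Content-Type` follow Microsoft's REST example and should be checked against the current REST reference; the namespace, event hub and event fields are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict


def build_send_request(namespace_host: str, event_hub: str, sas_token: str,
                       event: Dict[str, Any]) -> urllib.request.Request:
    """Prepare (but do not send) a 'Send event' REST call for one JSON event."""
    url = f"https://{namespace_host}/{event_hub}/messages?timeout=60&api-version=2014-01"
    return urllib.request.Request(
        url,
        data=json.dumps(event).encode("utf-8"),  # the event body Eventstream will read
        method="POST",
        headers={
            "Authorization": sas_token,
            "Content-Type": "application/atom+xml;type=entry;charset=utf-8",
        },
    )


def send_event(request: urllib.request.Request) -> int:
    """Send the request; the REST reference documents 201 Created on success."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.status


if __name__ == "__main__":
    # Hypothetical values: use your own namespace, event hub and SAS token.
    req = build_send_request("myns.servicebus.windows.net", "myhub", "<sas-token>",
                             {"deviceId": "sensor-01", "temperature": 21.5})
    # print(send_event(req))
```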

If your data is not displayed correctly, change the data format, for example to CSV or Avro.
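
Why the data format matters is easiest to see with one event serialized two ways. The sketch below, using a hypothetical sample event, prints it as JSON and as CSV and shows that a CSV payload does not parse as JSON, which is the kind of mismatch Data Preview makes visible. Whether Eventstream expects a CSV header row is worth confirming in Data Preview; Avro is not shown because it needs a third-party library.

```python
import csv
import io
import json
from typing import Any, Dict

# Hypothetical sample event; use the fields your producer actually sends.
event: Dict[str, Any] = {"deviceId": "sensor-01", "temperature": 21.5,
                         "timestamp": "2023-06-01T12:00:00Z"}


def as_json(record: Dict[str, Any]) -> str:
    """One JSON object per event."""
    return json.dumps(record)


def as_csv(record: Dict[str, Any], include_header: bool = True) -> str:
    """The same event as delimited text, optionally preceded by a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(record), lineterminator="\n")
    if include_header:
        writer.writeheader()
    writer.writerow(record)
    return buffer.getvalue()


def looks_like_json(payload: str) -> bool:
    """Quick check: does the payload parse as JSON at all?"""
    try:
        json.loads(payload)
        return True
    except json.JSONDecodeError:
        return False


print(as_json(event))
print(as_csv(event), end="")
```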

Destination

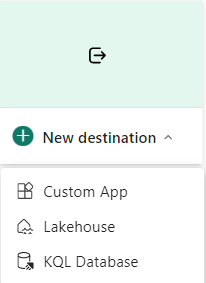

One of the last steps in our configuration is to set up the destination for the Eventstream.

In this blog we use a Lakehouse (more destinations are available), so that we can store our data and later build reports on top of it.

Lakehouse

You can choose if you want to create a new Lakehouse or use an existing one.

If you have not created a Lakehouse yet, you need to create one first.

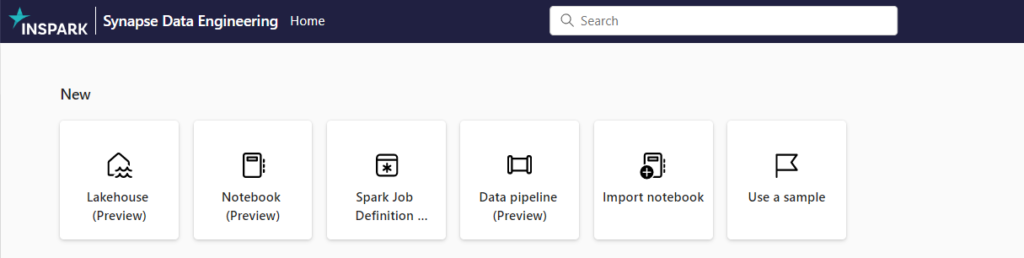

In the bottom-left corner, select the Data Engineering experience.

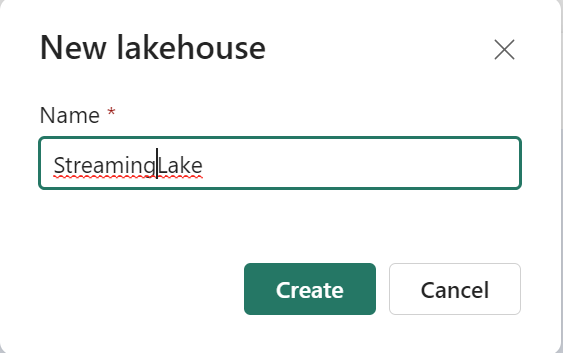

Create a new Lakehouse, enter a name and click Create.

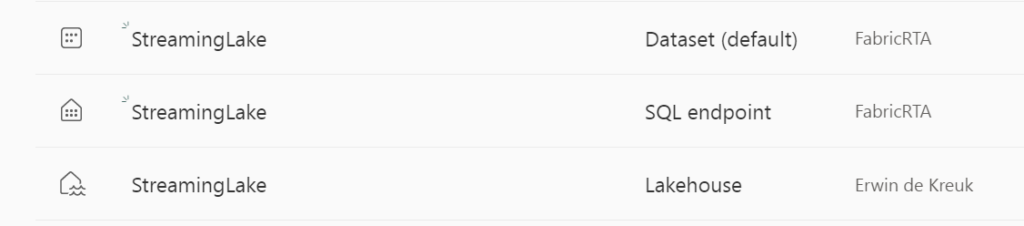

After creating the Lakehouse, you will see that a default dataset and a SQL endpoint are created automatically. How easy is that!

Create the Eventstream Destination

Create Lakehouse as Eventstream Destination

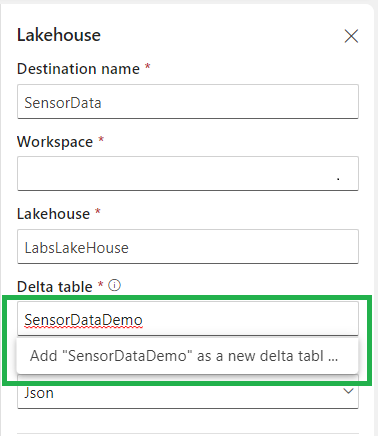

A new window opens where we can configure the Lakehouse destination.

| Setting | Description |
| --- | --- |
| Destination name | The name of the destination |
| Workspace | The workspace where your Lakehouse is located |
| Lakehouse | The Lakehouse you want to use (you can have more than one in the same workspace) |
| Delta table | The Delta table where you want to store the data; you can also create a new table from here |
| Data format | Usually the same format as the one you selected for the source |

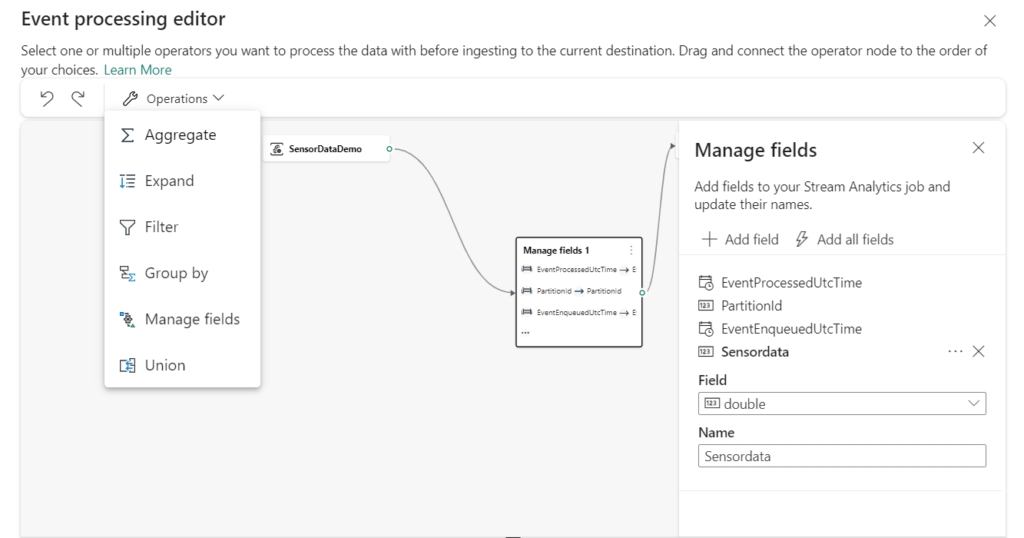

Event Processing

Before you create the destination, you can use the event processor to transform and preview the data that is ingested into the destination. The event processor editor is a no-code, drag-and-drop experience for designing your event data processing logic.

As you can see, many operations are available to transform your data into the right shape; with the no-code editor, renaming a field is a matter of seconds.
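
To make concrete what a rename step does to each event, here is a plain-Python illustration. It is not how Fabric's event processor is implemented; it only shows the per-event effect of renaming (and removing) fields, and the field names are hypothetical.

```python
from typing import Any, Dict, Iterable, Iterator, Mapping, Optional


def manage_fields(event: Mapping[str, Any],
                  rename: Optional[Mapping[str, str]] = None,
                  drop: Iterable[str] = ()) -> Dict[str, Any]:
    """Drop unwanted fields and rename the rest, keeping the original field order."""
    rename = rename or {}
    dropped = set(drop)
    return {rename.get(name, name): value
            for name, value in event.items() if name not in dropped}


def transform_stream(events: Iterable[Mapping[str, Any]], **options: Any) -> Iterator[Dict[str, Any]]:
    """Apply the same field mapping to every event, one event at a time."""
    for event in events:
        yield manage_fields(event, **options)


incoming = [{"deviceId": "sensor-01", "temp": 21.5, "debug": 1}]
print(list(transform_stream(incoming, rename={"temp": "temperature"}, drop=["debug"])))
```

Because each event is handled independently, the mapping works the same on a single event or an endless stream.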

The last step is to create the destination: simply click Create.

The Eventstream is now ready: the source is streaming data and the destination is ingesting it.

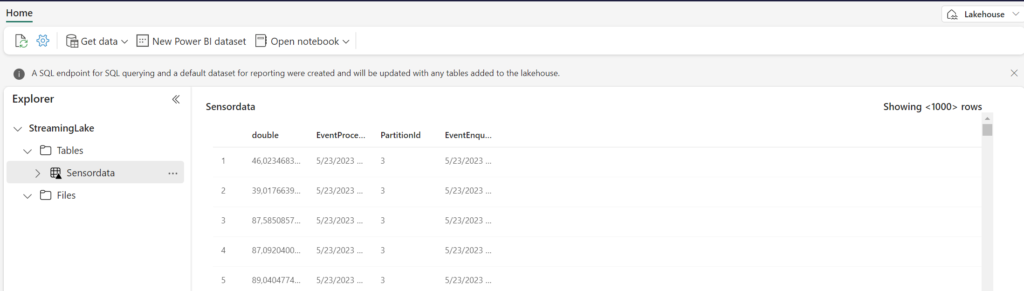

Navigate to your Lakehouse to verify the ingested data.

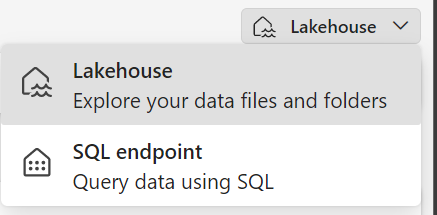

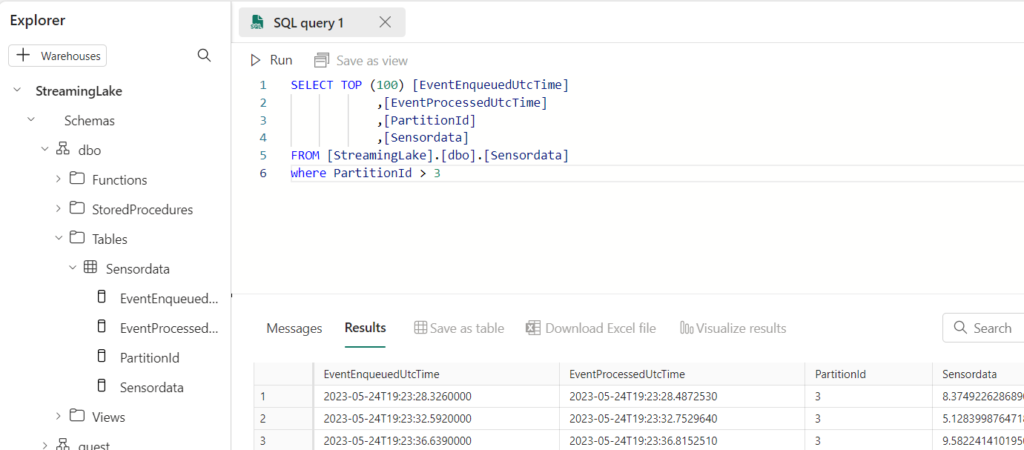

If you prefer to verify the data with a T-SQL query, switch to SQL endpoint mode using the selector in the upper-right corner.

Now you can query the ingested data with T-SQL (the Lakehouse SQL endpoint is read-only).
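
The same check can be scripted. The sketch below assumes the `pyodbc` package and Microsoft ODBC Driver 18 for SQL Server are installed, and that you copy the server name from your Lakehouse SQL endpoint settings in Fabric; the server, Lakehouse and table names are hypothetical. It uses interactive Microsoft Entra ID sign-in (`ActiveDirectoryInteractive`, supported by the ODBC driver on Windows); other authentication options exist.

```python
from typing import Any, List


def build_odbc_connection_string(server: str, database: str) -> str:
    """ODBC connection string for a Lakehouse SQL endpoint (interactive Entra ID sign-in)."""
    return (
        "Driver={ODBC Driver 18 for SQL Server};"
        f"Server={server};"
        f"Database={database};"
        "Authentication=ActiveDirectoryInteractive;"
        "Encrypt=yes;"
    )


def build_preview_query(table: str, top: int = 10) -> str:
    """SELECT the first rows of a Lakehouse table exposed under the dbo schema."""
    if not table.replace("_", "").isalnum():  # only plain names, to keep the SQL safe
        raise ValueError(f"Unexpected table name: {table!r}")
    return f"SELECT TOP ({int(top)}) * FROM dbo.[{table}];"


def run_query(connection_string: str, query: str) -> List[Any]:
    import pyodbc  # third-party dependency, only imported when a query is actually run

    connection = pyodbc.connect(connection_string)
    try:
        return connection.cursor().execute(query).fetchall()
    finally:
        connection.close()


if __name__ == "__main__":
    # Hypothetical names: copy the server from your SQL endpoint settings.
    conn_str = build_odbc_connection_string("<your-sql-endpoint-server>", "MyLakehouse")
    print(build_preview_query("eventdata"))
    # rows = run_query(conn_str, build_preview_query("eventdata"))
```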

Next Steps

Build a Power BI report on the ingested event data in the Lakehouse. As mentioned before, a default dataset has already been created.

In my next blog I will explain how to use a KQL database as a destination, so stay tuned.

Documentation

Click below to read more about Microsoft Fabric and Real-Time Analytics.

Microsoft Fabric Real-Time Analytics documentation

Exploring the Fabric technical documentation

More information about Microsoft Fabric can be found at:

Microsoft Fabric Content Hub

As always, in case you have any questions left, don’t hesitate to contact me.