Azure Data Factory: Generate Pipeline from the new Template Gallery

Last week I mentioned that we could save a Pipeline to Git. Today I found out that you can also create a Pipeline from a predefined Solution Template.

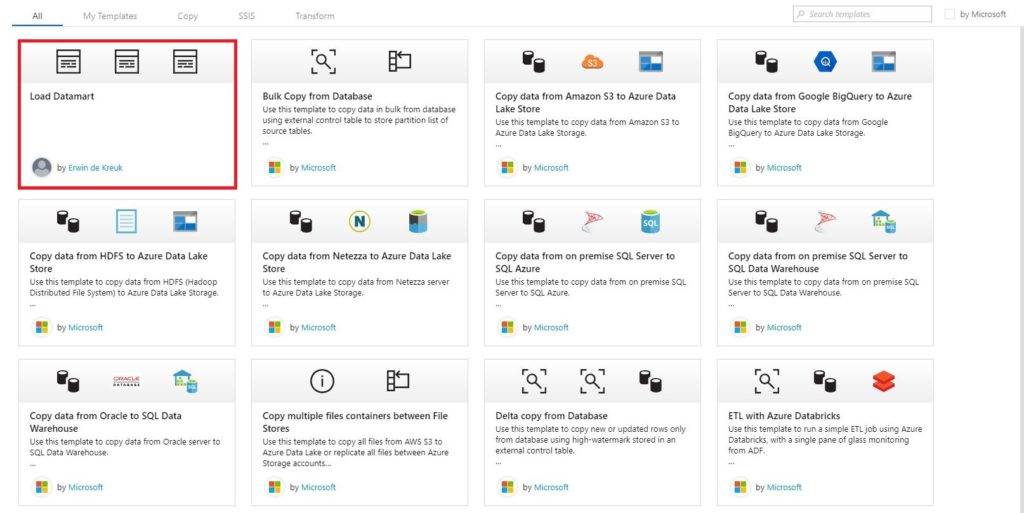

Template Gallery

These templates make it easier to get started with Azure Data Factory, and they reduce development time when you start a new project.

Currently Microsoft has released the following templates:

Copy templates:

- Bulk copy from Database

- Copy multiple file containers between file-based stores

- Delta copy from Database

Copy from <source> to <destination>

- From Amazon S3 to Azure Data Lake Store Gen 2

- From Google Big Query to Azure Data Lake Store Gen 2

- From HDF to Azure Data Lake Store Gen 2

- From Netezza to Azure Data Lake Store Gen 1

- From SQL Server on premises to Azure SQL Database

- From SQL Server on premises to Azure SQL Data Warehouse

- From Oracle on premises to Azure SQL Data Warehouse

SSIS templates:

- Schedule Azure-SSIS Integration Runtime to execute SSIS packages

Transform templates:

- ETL with Azure Databricks

These templates can be found directly in the Azure Data Factory Portal, where you can now select the option Create Pipeline from Template.

After selecting this option, you will see all templates from the gallery as well as the templates you have saved yourself.

Create a Pipeline from a Template

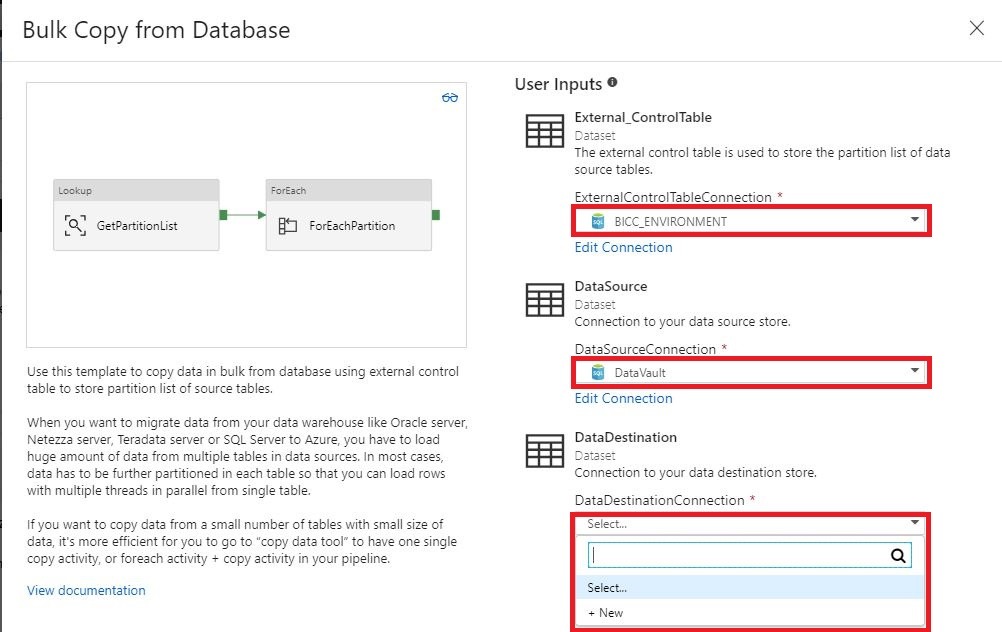

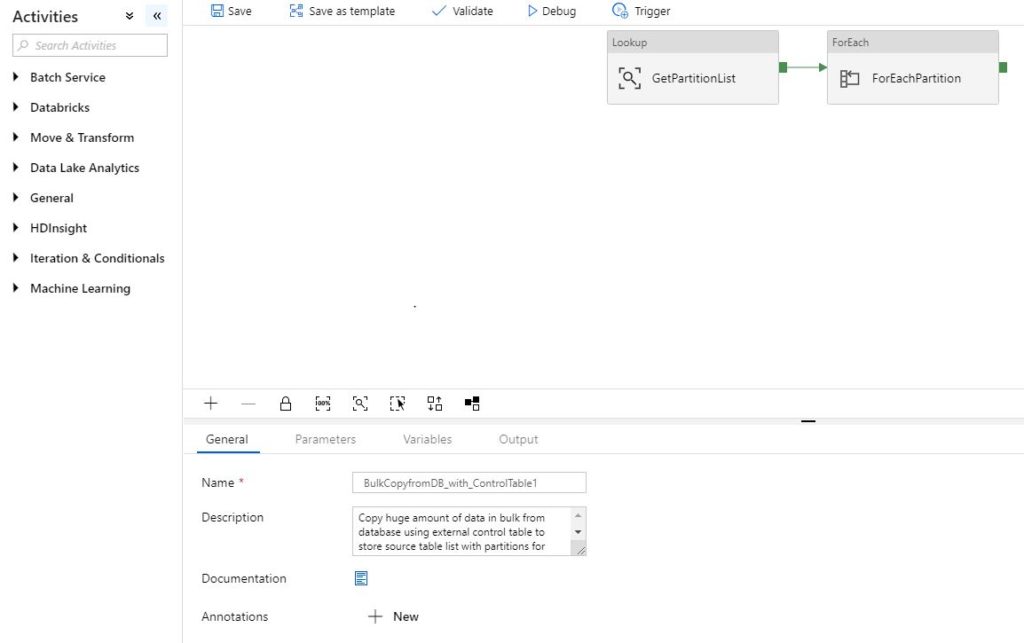

To create a pipeline from a template, click the template you want to use. For this example, I chose the Bulk Copy from Database template. A wizard opens that guides you through the remaining steps.

The only thing you need to do now is select the correct inputs. If an input does not exist yet, you can also create a new one from within the template wizard.

After selecting all the correct inputs, you can finalize the wizard, and the pipeline generated from the template will be added to your Factory.

Do you want to follow the detailed steps for creating this pipeline? The details can be found here.

Thanks so much for reading through this article today, and I hope you all take some time to try it out. It will make your life easier.

0 Comments