Provision users and groups from AAD to Azure Databricks (part 1)

Blog series: Provisioning identities from Azure Active Directory to Azure Databricks.

Instead of adding users and groups manually to your Azure Databricks environment, you can sync them automatically from your Azure Active Directory to your Azure Databricks account with SCIM. This is one of the recommendations from Databricks.

Other advantages are:

- Streamlined onboarding of new employees or teams in Azure Databricks.

- Users can be easily deleted from the Azure Databricks workspaces through the Azure Active Directory. This ensures a consistent offboarding process and prevents unauthorized users from accessing sensitive data.

There are a couple of important requirements to have in place before we can start. You need to have or be:

- Azure Databricks account with a Premium Plan.

- Azure Databricks account admin to provision users to your Azure Databricks account using SCIM.

- Azure Databricks workspace admin to provision users to an Azure Databricks workspace using SCIM.

- Azure Active Directory Premium edition to be able to provision groups.

- Provisioning of users is available for all Azure Active Directory editions (including Azure AD Free).

Blog series

This blog post series contains the following topics, which I will post in the next few days:

- Configure the Enterprise Application (SCIM) for Azure Databricks Account Level provisioning

- Assign and Provision users and groups in the Enterprise Application (SCIM)

- Creating a metastore in your Azure Databricks account to assign an Azure Databricks Workspace

- Assign Users and groups to an Azure Databricks Workspace and define the correct entitlements

- Add Service Principals to your Azure Databricks account using the account console

- Configure the Enterprise Application (SCIM) for Azure Databricks Workspace provisioning

There are two options for provisioning users and groups to Azure Databricks using Azure Active Directory (AAD): at the Azure Databricks account level or at the Azure Databricks workspace level. This post covers the account level.

Configure the Enterprise Application (SCIM) for Azure Databricks Account Level provisioning

Azure Databricks account level

Before you start, log in to the Azure Databricks account console.

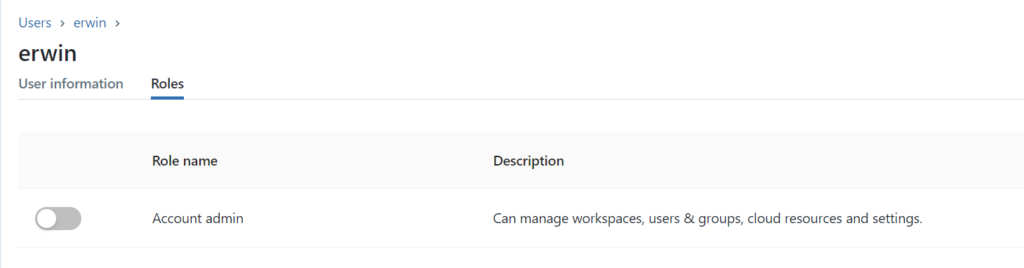

Make sure that you’re an Azure Databricks account admin. If you’re not, check who is an account admin (you can see this on the main page of the User management option) and ask them to grant you access; they can do this by clicking on the account name.

Once you’re an account admin, click on the user settings icon (marked in red) on the left side.

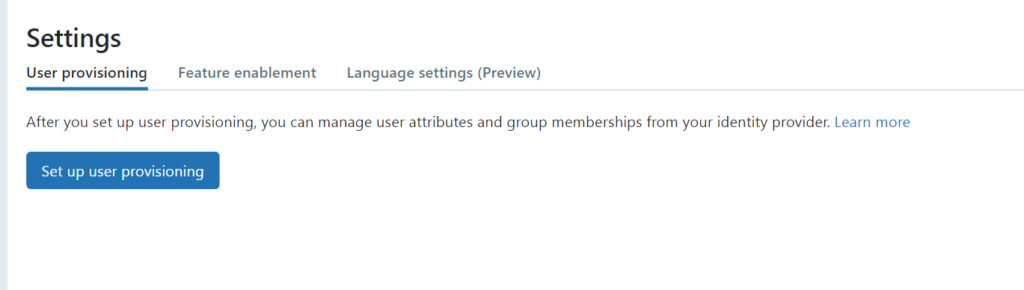

Click on User Provisioning and then on Set up user provisioning.

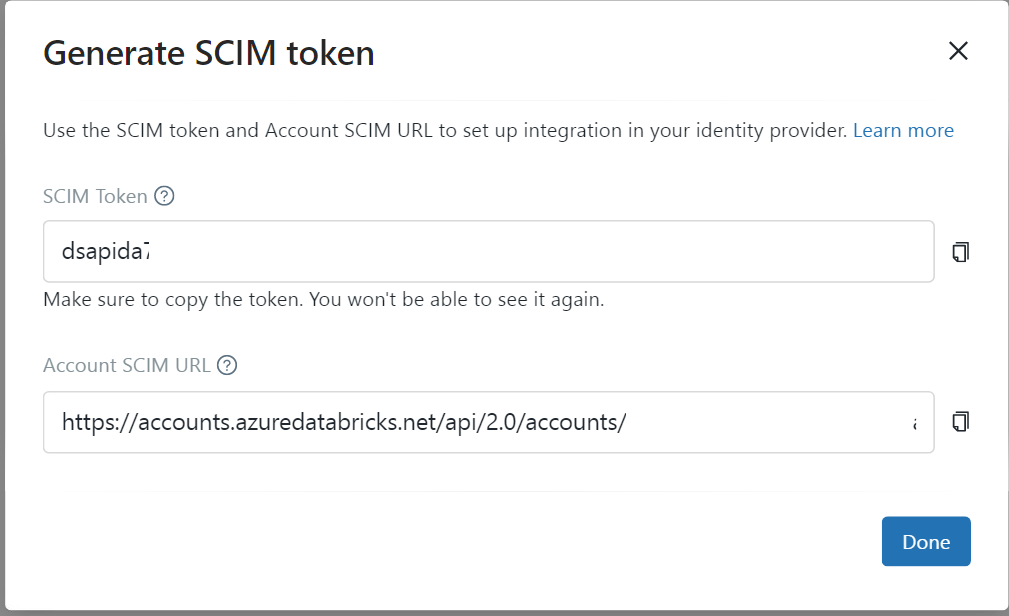

Copy the SCIM token and the Account SCIM URL and store them in an Azure Key Vault. We need these settings later to configure the Enterprise Application.
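If you prefer to script this step instead of using the portal, here is a minimal sketch of how the two values could be stored with the Azure CLI from Python. It assumes the Azure CLI is installed and that you are already logged in with rights to set secrets on the vault. The vault name and the secret names are hypothetical placeholders, not names used elsewhere in this series.

```python
import shutil
import subprocess
from typing import List


def build_secret_set_command(vault_name: str, secret_name: str, value: str) -> List[str]:
    """Build the `az keyvault secret set` call as an argument list (nothing is executed here)."""
    return [
        "az", "keyvault", "secret", "set",
        "--vault-name", vault_name,
        "--name", secret_name,
        "--value", value,
        "--output", "none",  # do not echo the stored secret back to the terminal
    ]


def store_secret(vault_name: str, secret_name: str, value: str) -> None:
    """Run the command; raises CalledProcessError if the Azure CLI reports a failure."""
    az_path = shutil.which("az")  # also resolves az.cmd on Windows
    if az_path is None:
        raise RuntimeError("Azure CLI (az) was not found on PATH")
    command = build_secret_set_command(vault_name, secret_name, value)
    command[0] = az_path
    subprocess.run(command, check=True)


# Hypothetical usage: replace the vault name and the values with your own.
# store_secret("kv-databricks-demo", "databricks-scim-token", "<SCIM token>")
# store_secret("kv-databricks-demo", "databricks-account-scim-url", "<Account SCIM URL>")
```

Keep in mind that passing the token with `--value` makes it visible in the process list while the command runs, so this is fine for a quick setup but probably not what you want in a shared build agent.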

Configure the Enterprise Application

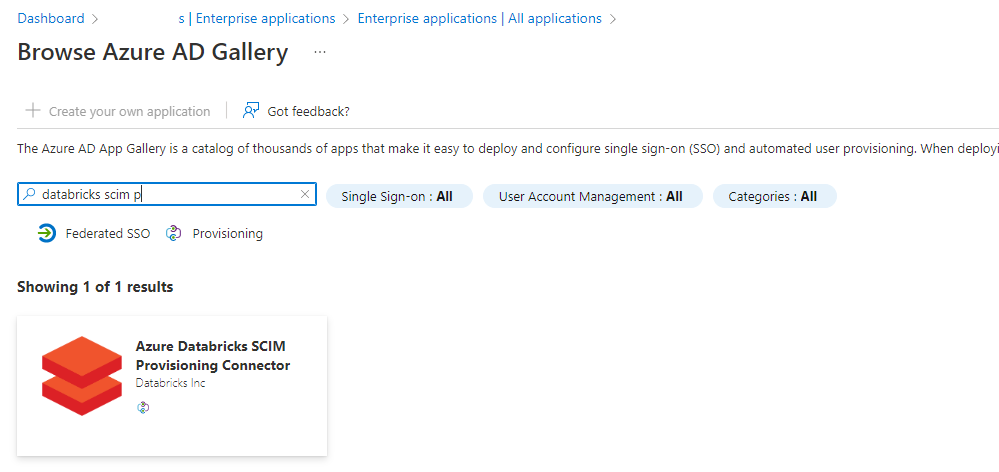

In the Azure portal, go to Azure Active Directory > Enterprise Applications.

Click on New application and search for the “Azure Databricks SCIM Provisioning Connector”.

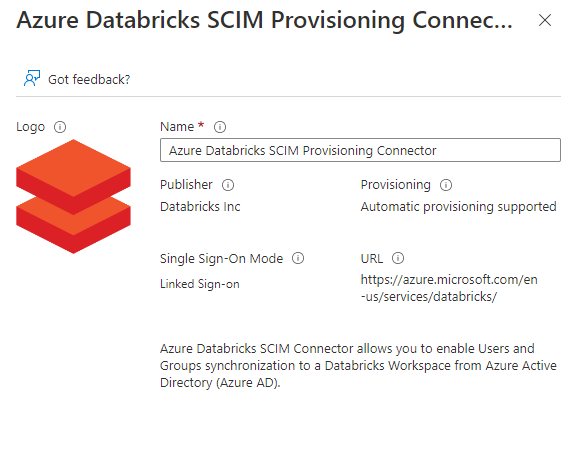

Click on the app:

Enter a name for the application. I used “Azure Databricks SCIM AzureDataBricksWestEurope”.

Click on Create and wait until the application is created.

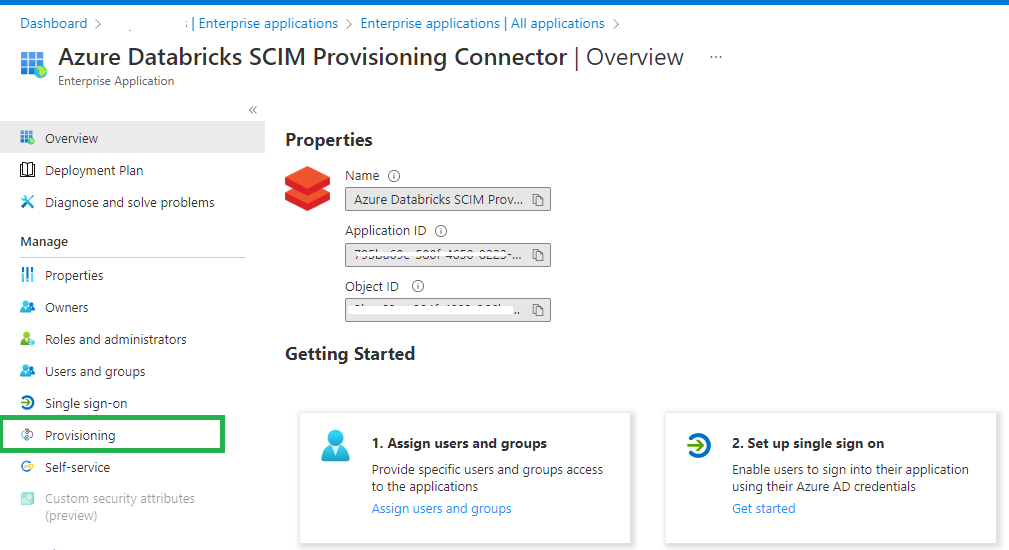

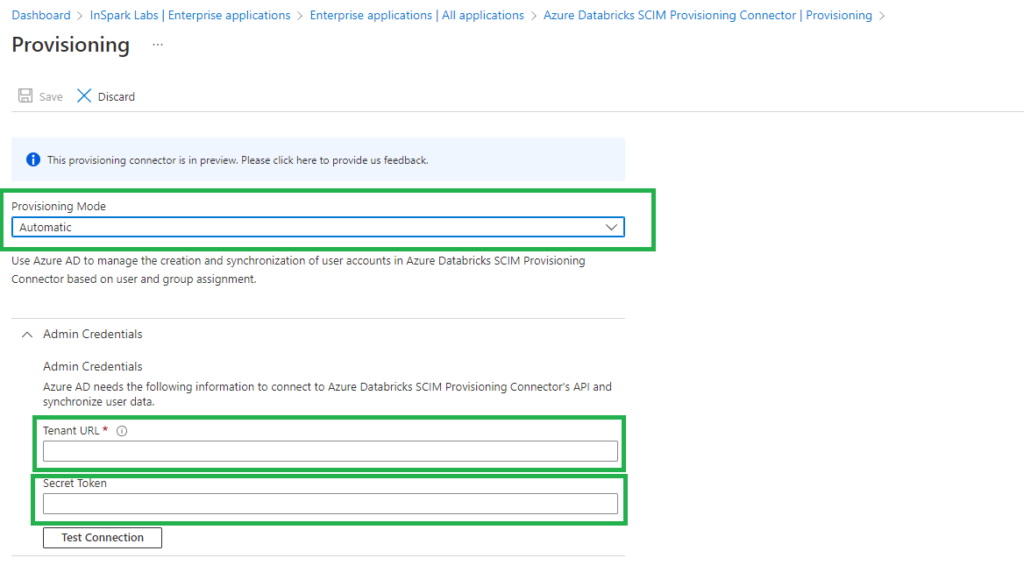

Click on Provisioning and set Provisioning Mode to Automatic.

Set the Tenant URL to the Account SCIM URL that we saved earlier in our Key Vault.

Set Secret Token to the Azure Databricks SCIM token that we generated and saved earlier in our Key Vault.

Click on Test Connection to see if everything is configured correctly.
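If Test Connection fails, it can help to check the token and URL outside of the portal. The sketch below is my own approximation of such a check, not what the connector does internally: it sends a standard SCIM list request for a single user with the token as a bearer token. The function names are made up for this example, and the URL in the comment is only the shape I would expect the Account SCIM URL to have.

```python
import urllib.request


def build_scim_check_request(scim_url: str, token: str) -> urllib.request.Request:
    """Build a GET request that asks the SCIM endpoint for at most one user."""
    url = scim_url.rstrip("/") + "/Users?count=1"
    return urllib.request.Request(
        url,
        headers={
            "Authorization": f"Bearer {token}",
            "Accept": "application/scim+json",
        },
        method="GET",
    )


def check_scim_connection(scim_url: str, token: str, timeout: float = 10.0) -> bool:
    """Return True on HTTP 200; urllib raises HTTPError for responses such as 401 or 403."""
    request = build_scim_check_request(scim_url, token)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status == 200


# Hypothetical usage (assumed URL shape, fill in your own values from the Key Vault):
# check_scim_connection(
#     "https://accounts.azuredatabricks.net/api/2.0/accounts/<account-id>/scim/v2",
#     "<SCIM token>",
# )
```

An `HTTPError` with status 401 usually points at the token, while a 404 suggests the Tenant URL was not copied completely.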

In my next blog post I will explain how to assign and provision users and groups in the Enterprise Application (SCIM).

Hi, do you know if this works with AD PIM groups? We are looking to grant support users access to production for a short period of time.

Hi Victor,

I had this discussion with someone else today as well. PIM works, but the users are not deleted in Databricks because they are created as local users. I’ve put it on my to-do list to check it out. I’ll let you know when I’ve done that, but I have a full agenda at the moment.