Provision users and groups from AAD to Azure Databricks (part 4)

Assign users and groups to an Azure Databricks workspace

In the previous blog post, you created a metastore in your Azure Databricks account and assigned it to an Azure Databricks workspace. In this post, you will learn how to assign users and groups to an Azure Databricks workspace and define the correct entitlements.

You need to assign the synced groups to each Azure Databricks workspace separately; this has to be repeated for every workspace. That’s one of the reasons to create a separate group of users for every environment:

- SG_DATABRICKS_USERS_DVLM: for users who are allowed to use the Development environment.

- SG_DATABRICKS_USERS_PROD: for users who are allowed to use the Production environment.

- SG_DATABRICKS_ACCOUNT_ADMIN: for users who need to be assigned the Account Admin role.

You can add the same users to both user groups for now. This setup still prepares you for the future, in case you want to separate Development and Production users at a later stage.
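Because the assignment has to be repeated for every workspace, it can help to write the "one group per environment" convention down explicitly. The sketch below only captures that convention as data; the environment keys and the workspace names (`dbw-analytics-dvlm`, `dbw-analytics-prod`) are hypothetical examples, and the Account Admin group is left out because it is not a per-environment user group.

```python
# Sketch of the "one group per environment" convention.
# Environment keys and workspace names are hypothetical examples.
ENVIRONMENT_GROUPS = {
    "DVLM": "SG_DATABRICKS_USERS_DVLM",
    "PROD": "SG_DATABRICKS_USERS_PROD",
}


def group_for_environment(environment: str) -> str:
    """Return the synced AAD group that should be assigned to a workspace."""
    try:
        return ENVIRONMENT_GROUPS[environment.upper()]
    except KeyError:
        raise ValueError(f"No group defined for environment {environment!r}") from None


def assignment_plan(workspaces: dict[str, str]) -> dict[str, str]:
    """Map each workspace name to the group that must be assigned to it.

    Group assignment is per workspace, so every workspace gets its own entry.
    """
    return {name: group_for_environment(env) for name, env in workspaces.items()}


if __name__ == "__main__":
    # Hypothetical workspace names; replace them with your own.
    plan = assignment_plan({"dbw-analytics-dvlm": "dvlm", "dbw-analytics-prod": "prod"})
    for workspace, group in plan.items():
        print(f"{workspace}: assign {group}")
```

Keeping the mapping in one place makes it obvious which group belongs to which workspace once you add more environments.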

Azure Databricks Workspace

Log in to your workspace. If you’re still logged in to the account console, you can open the workspace directly from the Data icon in the left-hand menu.

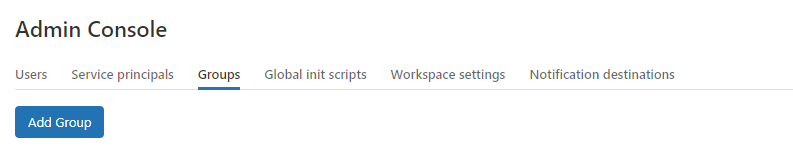

Once the workspace is open, select Admin Console in the upper-right corner.

Select the Groups tab.

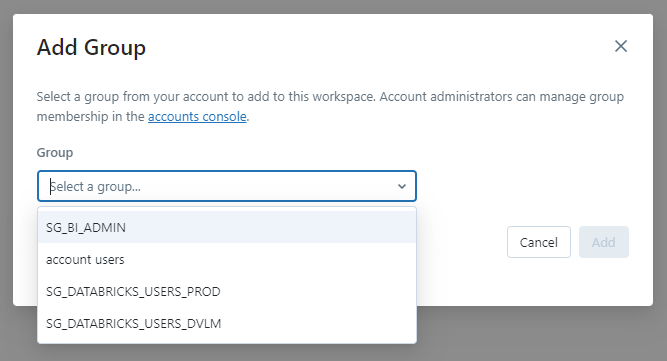

Select Add Group.

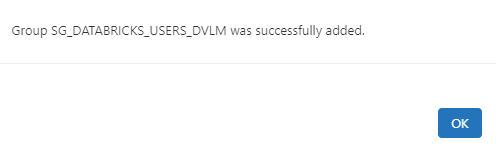

Select the groups you want to add, one at a time.

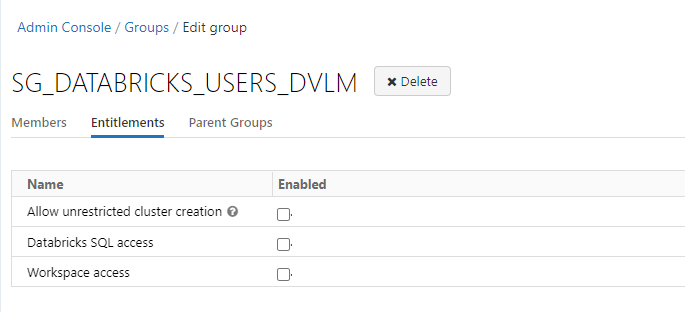

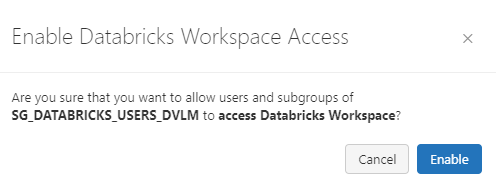
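If you prefer to script this step instead of clicking through the admin console, the same assignment can, as far as I know, be made at account level through the workspace permission assignment endpoint of the Account API. The sketch below only builds the request and does not send it. The account ID, workspace ID, principal ID (the account-level ID of the synced group) and token are placeholders you fill in yourself, and the token is assumed to be an Azure AD access token for an account admin. Please check the Databricks REST API reference before relying on it.

```python
import json
import urllib.request

# Account console host for Azure Databricks.
ACCOUNTS_HOST = "https://accounts.azuredatabricks.net"


def build_workspace_assignment(
    account_id: str,
    workspace_id: int,
    principal_id: int,
    token: str,
    admin: bool = False,
) -> urllib.request.Request:
    """Build (but do not send) a request that assigns a group to a workspace.

    principal_id is assumed to be the account-level ID of the synced group.
    The permission is USER for regular members, ADMIN for workspace admins.
    """
    url = (
        f"{ACCOUNTS_HOST}/api/2.0/accounts/{account_id}"
        f"/workspaces/{workspace_id}/permissionassignments/principals/{principal_id}"
    )
    body = {"permissions": ["ADMIN" if admin else "USER"]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="PUT",
    )
```

To actually send it, pass the returned request to `urllib.request.urlopen`. Run it once per group and per workspace, which mirrors selecting the groups one at a time in the UI.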

The groups are now visible, and you can assign the correct entitlements to each group:

Workspace access:

- When granted to a user or service principal, they can access the Data Science & Engineering and Databricks Machine Learning persona-based environments.

- This entitlement can’t be removed from workspace admins.

Databricks SQL access:

- When granted to a user or service principal, they can access Databricks SQL.

Allow unrestricted cluster creation:

- When granted to a user or service principal, they can create clusters. You can restrict access to existing clusters using cluster-level permissions.

- This entitlement can’t be removed from workspace admins.
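The three checkboxes above correspond to entitlements that, to my knowledge, the workspace SCIM API calls `workspace-access`, `databricks-sql-access` and `allow-cluster-create`. The sketch below builds a SCIM `PatchOp` request that adds entitlements to a group. It does not send anything: the workspace URL, the group ID (the group's SCIM ID in the workspace) and the token are placeholders, and whether you can patch an account-level group this way depends on your setup, so verify against the Databricks SCIM API reference first.

```python
import json
import urllib.request

# Entitlement values as named in the Databricks SCIM API, one per checkbox above.
WORKSPACE_ACCESS = "workspace-access"
DATABRICKS_SQL_ACCESS = "databricks-sql-access"
ALLOW_CLUSTER_CREATE = "allow-cluster-create"


def entitlement_patch(entitlements: list[str]) -> dict:
    """Return a SCIM PatchOp body that adds the given entitlements to a group."""
    return {
        "schemas": ["urn:ietf:params:scim:api:messages:2.0:PatchOp"],
        "Operations": [
            {
                "op": "add",
                "path": "entitlements",
                "value": [{"value": name} for name in entitlements],
            }
        ],
    }


def build_group_patch(
    workspace_url: str, group_id: str, token: str, entitlements: list[str]
) -> urllib.request.Request:
    """Build (but do not send) a PATCH request for the workspace SCIM Groups endpoint."""
    url = f"{workspace_url.rstrip('/')}/api/2.0/preview/scim/v2/Groups/{group_id}"
    return urllib.request.Request(
        url,
        data=json.dumps(entitlement_patch(entitlements)).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/scim+json",
        },
        method="PATCH",
    )
```

For example, a Development user group could get `WORKSPACE_ACCESS` and `DATABRICKS_SQL_ACCESS`, while `ALLOW_CLUSTER_CREATE` is only granted where users really need to create their own clusters.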

Account admins are synced by default to all workspaces.

Users added through a group are displayed with a separate icon.


Please note that Databricks recommends assigning workspace permissions to groups instead of assigning them to users individually.

In my next blog post, I will explain how to add service principals to your Azure Databricks account using the account console.

Other blog posts in this series:

- Configure the Enterprise Application (SCIM) for Azure Databricks Account Level provisioning

- Assign and Provision users and groups in the Enterprise Application (SCIM)

- Creating a metastore in your Azure Databricks account to assign an Azure Databricks Workspace

- Assign Users and groups to an Azure Databricks Workspace and define the correct entitlements

- Add Service Principals to your Azure Databricks account using the account console

- Configure the Enterprise Application (SCIM) for Azure Databricks Workspace provisioning
