Provision users and groups from AAD to Azure Databricks (part 3)

Creating a metastore in your Azure Databricks account

In the previous blog post you learned how to sync users and groups and assign them to the Enterprise Application. In this post, you will learn how to create a metastore and assign it to Azure Databricks workspaces. This is a prerequisite for assigning users and groups to the Azure Databricks workspaces.

In the scenario below we create a metastore that is accessed using a managed identity, which is the recommended approach.

Before you can create a metastore, you need to create an Azure Databricks access connector. This is a first-party Azure resource that lets you connect a system-assigned managed identity to an Azure Databricks account.

Requirements:

- You need to have an Azure Databricks account with a Premium Plan.

- You must be an Azure Databricks account admin.

- You must have an Azure Data Lake Storage Gen2 storage account (it must be in the same region as your Azure Databricks workspace).
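To double-check the storage requirement before you start, you could compare the storage account and the workspace programmatically. The sketch below assumes you have already fetched both resources as dictionaries shaped like their Azure Resource Manager JSON (for example copied from the JSON view in the Azure Portal). The helper name `check_storage_requirements` and the sample values are hypothetical.

```python
def check_storage_requirements(storage_account: dict, workspace: dict) -> list[str]:
    """Return a list of problems; an empty list means the requirements are met.

    Hypothetical helper: both arguments are assumed to be ARM-style resource
    dictionaries with a top-level "location" key.
    """
    problems = []
    # ADLS Gen2 means the hierarchical namespace is enabled on the storage account.
    if not storage_account.get("properties", {}).get("isHnsEnabled", False):
        problems.append("storage account is not ADLS Gen2 (hierarchical namespace disabled)")
    # The storage account must be in the same region as the workspace.
    if storage_account["location"].lower() != workspace["location"].lower():
        problems.append(
            f"region mismatch: storage in {storage_account['location']}, "
            f"workspace in {workspace['location']}"
        )
    return problems


# Hypothetical sample resources.
storage = {"name": "mydatalake", "location": "westeurope", "properties": {"isHnsEnabled": True}}
workspace = {"name": "my-workspace", "location": "westeurope"}
print(check_storage_requirements(storage, workspace))  # []
```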

Azure Databricks access connector

Log in to the Azure Portal as a Contributor or as an Owner of a resource group.

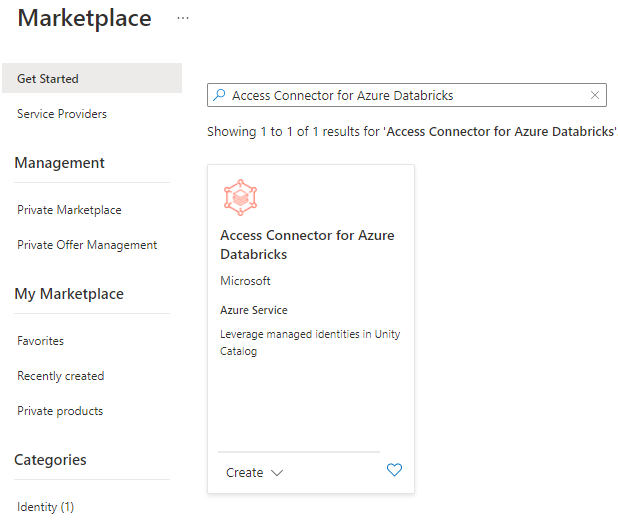

Search for Access Connector for Azure Databricks in the Marketplace and click Create.

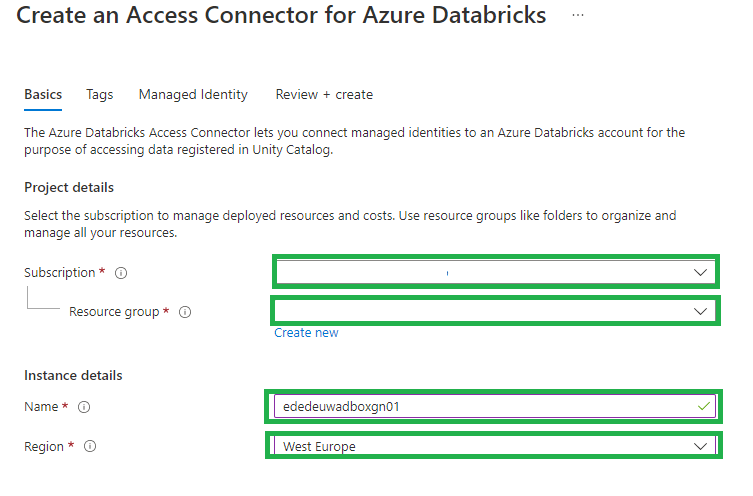

Configure the Connector

- Subscription: Select the subscription in which you want to create the access connector.

- Resource group: This should be a resource group in the same region as the storage account that you will connect to.

- Name: The name of the connector.

- Region: Same region as the storage account that you will connect to.

Click Review + create.

When you see the Validation Passed message, click Create.
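You will need the connector's resource ID later, when you create the metastore. It follows the standard Azure Resource Manager ID format, so it can be assembled from the values you entered above. A minimal sketch; the subscription ID, resource group and connector name below are placeholders, not real resources.

```python
def access_connector_resource_id(subscription_id: str, resource_group: str, name: str) -> str:
    """Build the ARM resource ID of an Azure Databricks access connector."""
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Databricks/accessConnectors/{name}"
    )


# Placeholder values for illustration only.
connector_id = access_connector_resource_id(
    "00000000-0000-0000-0000-000000000000", "rg-databricks", "ac-databricks-weu"
)
print(connector_id)
# /subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/rg-databricks/providers/Microsoft.Databricks/accessConnectors/ac-databricks-weu
```

Comparing this value with the resource ID shown in the portal is a quick way to confirm you picked the right connector.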

Grant the managed identity access to the storage account

- In the Azure Portal, open your Azure Data Lake Storage Gen2 storage account. You must be an Owner of the storage account or have the User Access Administrator Azure RBAC role on it.

- Go to Access Control (IAM), click + Add, and select Add role assignment.

- Select the Storage Blob Data Contributor role and click Next.

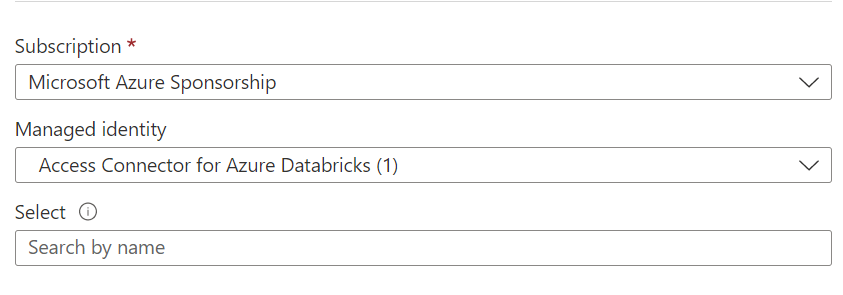

- Under Assign access to, select Managed identity.

- Click + Select members, and under Managed identity select Access connector for Azure Databricks.

- Search for your connector name, select it, and click Review + assign.
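If you prefer to script this step, the role assignment is a single Azure Resource Manager request. The sketch below only builds the request URL and JSON body; it does not send anything. It assumes the built-in role definition ID `ba92f5b4-2d11-453d-a403-e96b0029c9fe` for Storage Blob Data Contributor, the `2022-04-01` api-version of `Microsoft.Authorization/roleAssignments`, and placeholder IDs. `principal_id` stands for the object ID of the access connector's system-assigned managed identity.

```python
import json
import uuid

# Assumed built-in role definition ID of "Storage Blob Data Contributor";
# verify with: az role definition list --name "Storage Blob Data Contributor"
STORAGE_BLOB_DATA_CONTRIBUTOR = "ba92f5b4-2d11-453d-a403-e96b0029c9fe"
API_VERSION = "2022-04-01"  # assumed api-version for role assignments


def role_assignment_request(subscription_id: str, storage_account_id: str, principal_id: str):
    """Build (url, body) for a PUT request that grants the role on the storage account."""
    assignment_name = str(uuid.uuid4())  # role assignment names are GUIDs
    url = (
        f"https://management.azure.com{storage_account_id}"
        f"/providers/Microsoft.Authorization/roleAssignments/{assignment_name}"
        f"?api-version={API_VERSION}"
    )
    body = {
        "properties": {
            "roleDefinitionId": (
                f"/subscriptions/{subscription_id}/providers/Microsoft.Authorization"
                f"/roleDefinitions/{STORAGE_BLOB_DATA_CONTRIBUTOR}"
            ),
            "principalId": principal_id,
            # Managed identities are assigned roles as service principals.
            "principalType": "ServicePrincipal",
        }
    }
    return url, body


# Placeholder values for illustration only.
sub = "00000000-0000-0000-0000-000000000000"
storage_id = f"/subscriptions/{sub}/resourceGroups/rg-databricks/providers/Microsoft.Storage/storageAccounts/mydatalake"
url, body = role_assignment_request(sub, storage_id, "11111111-1111-1111-1111-111111111111")
print(url)
print(json.dumps(body, indent=2))
```

The scope of the assignment is the storage account, which matches granting the role from the storage account's Access Control (IAM) page.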

Create the Metastore

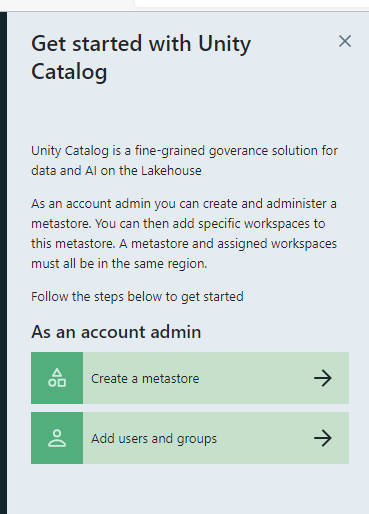

Log in to the Azure Databricks account console.

In the left sidebar, click the Data icon.

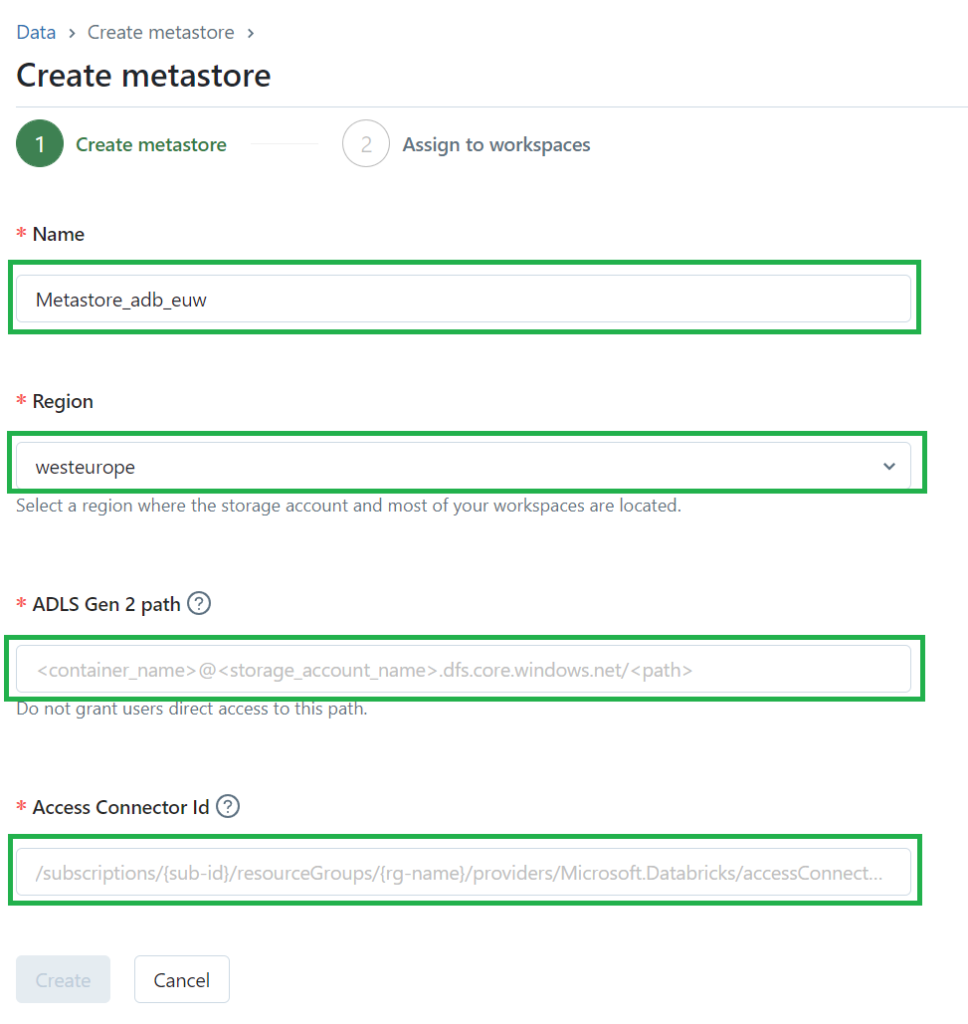

Click the “Create a Metastore” button and fill in the following fields:

- Name: A name for the metastore.

- Region: The region where the metastore will be deployed. This must be the same region as the workspaces, storage account and access connector.

- ADLS Gen2 path: Enter the path to the storage container that you will use as root storage for the metastore.

- Access Connector ID: Enter the Azure Databricks access connector’s resource ID, which you can find on the main page of the access connector.
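The ADLS Gen2 path uses the `abfss://<container>@<storage-account>.dfs.core.windows.net/` form. The sketch below assembles that path and sanity-checks an access connector ID before you paste both into the form. The container, storage account and IDs are placeholders, and the regular expression is a simple illustration, not an official validation rule.

```python
import re

# Illustrative pattern for an access connector resource ID (case-insensitive,
# because ARM resource IDs are not case-sensitive).
CONNECTOR_ID_PATTERN = re.compile(
    r"^/subscriptions/[0-9a-f-]{36}/resourceGroups/[^/]+"
    r"/providers/Microsoft\.Databricks/accessConnectors/[^/]+$",
    re.IGNORECASE,
)


def adls_gen2_path(container: str, storage_account: str, path: str = "") -> str:
    """Build the abfss:// URI of a container (optionally a sub-path) in ADLS Gen2."""
    return f"abfss://{container}@{storage_account}.dfs.core.windows.net/{path.lstrip('/')}"


def is_access_connector_id(resource_id: str) -> bool:
    """Return True if the string looks like an access connector resource ID."""
    return CONNECTOR_ID_PATTERN.match(resource_id) is not None


root = adls_gen2_path("metastore", "mydatalake")
print(root)  # abfss://metastore@mydatalake.dfs.core.windows.net/
```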

Click Create to create the metastore.

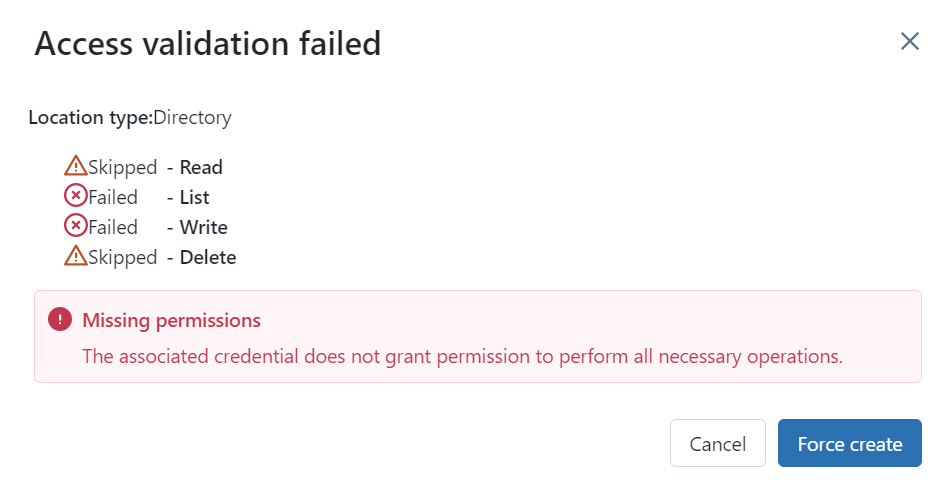

If you see the error shown above, you forgot to grant the managed identity access to the storage account. You can click Force Create to create the metastore anyway and grant the managed identity access afterwards.

Assign Workspace to Metastore

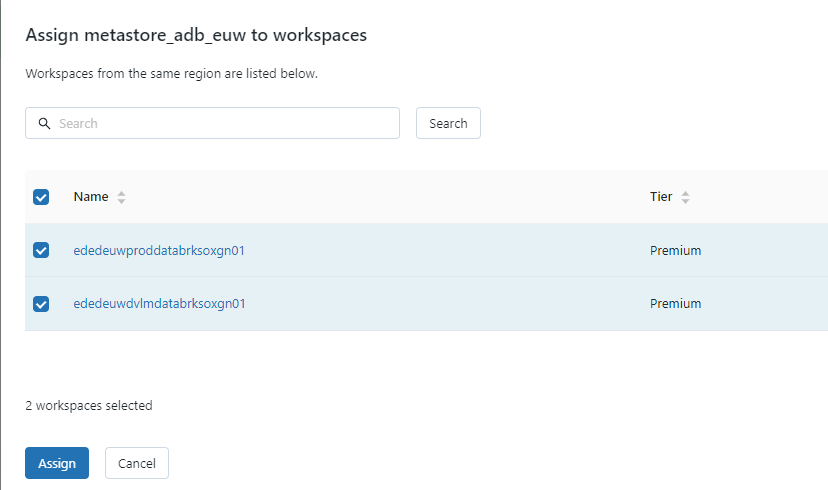

The last step is to assign the workspaces to the metastore. On the right side, click Assign to workspace.

Only workspaces that have not been assigned to a metastore yet are listed. Select the correct workspace and click Assign.
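The filtering behind this list can be modelled in a few lines: a workspace that is already attached to a metastore is not offered again. The sketch also keeps only workspaces in the metastore's region, which is an assumption based on the region requirement above rather than documented console behaviour. The dictionaries and field names are hypothetical, not the account console's data model.

```python
def assignable_workspaces(workspaces: list[dict], metastore_region: str) -> list[str]:
    """Return names of workspaces that are unassigned and in the metastore's region."""
    return [
        ws["name"]
        for ws in workspaces
        if ws.get("metastore_id") is None and ws["region"] == metastore_region
    ]


# Hypothetical workspaces.
workspaces = [
    {"name": "dev", "region": "westeurope", "metastore_id": None},
    {"name": "prd", "region": "westeurope", "metastore_id": "ms-1"},
    {"name": "us-dev", "region": "eastus", "metastore_id": None},
]
print(assignable_workspaces(workspaces, "westeurope"))  # ['dev']
```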

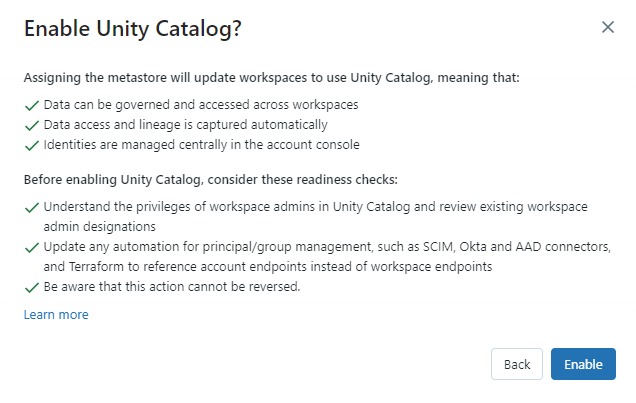

Enable Unity Catalog, and your workspaces are connected to the metastore.

In my next blog post I will explain how to assign users and groups to an Azure Databricks workspace and define the correct entitlements.

Other blog posts in this series:

- Configure the Enterprise Application (SCIM) for Azure Databricks Account Level provisioning

- Assign and Provision users and groups in the Enterprise Application (SCIM)

- Creating a metastore in your Azure Databricks account to assign an Azure Databricks Workspace

- Assign Users and groups to an Azure Databricks Workspace and define the correct entitlements

- Add Service Principals to your Azure Databricks account using the account console

- Configure the Enterprise Application (SCIM) for Azure Databricks Workspace provisioning
